📚 Guide to Implementing Custom Accelerated AI Libraries in SageMaker with oneAPI and Docker

💡 News category: AI News

🔗 Source: towardsdatascience.com

Learn how to build custom SageMaker models for Accelerated ML Libraries

AWS provides out-of-the-box machine-learning images for SageMaker, but what happens when you want to deploy your own custom inference and training solution?

This tutorial explores a specific implementation of custom ML training and inference that uses daal4py to optimize XGBoost for accelerated performance on Intel hardware. It assumes that you are already working with SageMaker models and endpoints.

This tutorial is part of a series about building hardware-optimized SageMaker endpoints with the Intel AI Analytics Toolkit. You can find all of the code for this tutorial here.

Configuring Dockerfile and Container Setup

We will use AWS Cloud9 because it already has all the permissions and applications to build our container image. You are welcome to build these on your local machine.

Let’s quickly review the purpose of the critical files in our image. I’ve linked each description to the appropriate file in this article’s GitHub Repo:

- train: This script will contain the program that trains our model. When you build your algorithm, you’ll edit this to include your training code.

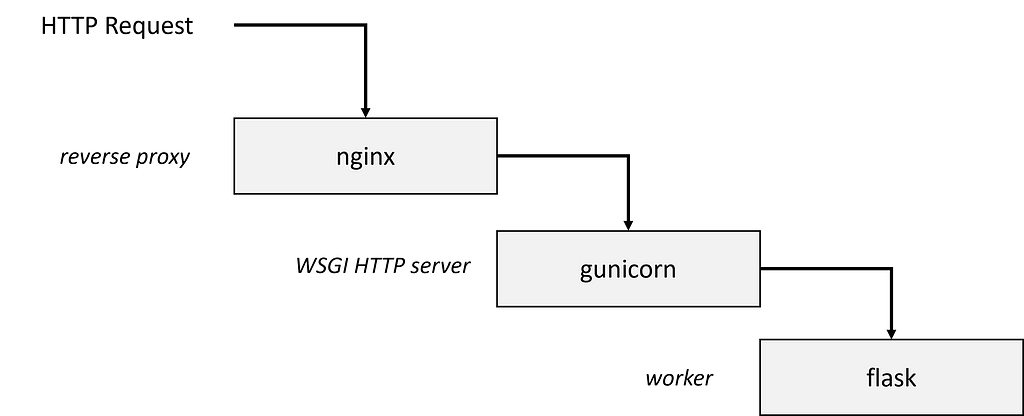

- serve: This script contains a wrapper for the inference server. In most cases, you can use this file as-is.

- wsgi.py: The start-up shell for the individual server workers. This only needs to be changed if you change where predictor.py is located or is named.

- predictor.py: The algorithm-specific inference code. This is the trickiest script because it must be heavily adapted to your raw data processing scheme, particularly the ping and invocations functions. The ping function determines if the container is working and healthy. In this sample container, we declare it healthy if we can load the model successfully. The invocations function is executed when a POST request is made to the SageMaker endpoint.

The ScoringService class includes two methods, get_model and predict. The predict method conditionally converts our trained XGBoost model to a daal4py model. daal4py speeds up XGBoost inference on the underlying hardware by using the Intel® oneAPI Data Analytics Library (oneDAL).

    import os
    import pickle

    import daal4py as d4p

    # Assumption: model artifacts live in /opt/ml/model, where SageMaker places them.
    model_path = "/opt/ml/model"


    class ScoringService(object):
        model = None  # Where we keep the model when it's loaded

        @classmethod
        def get_model(cls):
            """Get the model object for this instance, loading it if it's not already loaded."""
            if cls.model is None:
                with open(os.path.join(model_path, "xgboost-model"), "rb") as inp:
                    cls.model = pickle.load(inp)
            return cls.model

        @classmethod
        def predict(cls, input, daal_opt=False):
            """Receives an input and conditionally optimizes xgboost model using daal4py conversion.

            Args:
                input (a pandas dataframe): The data on which to do the predictions. There will be
                    one prediction per row in the dataframe"""
            clf = cls.get_model()
            if daal_opt:
                # Convert the booster to a daal4py gradient-boosted-trees model
                # and return the probability of the positive class.
                daal_model = d4p.get_gbt_model_from_xgboost(clf.get_booster())
                return d4p.gbt_classification_prediction(
                    nClasses=2,
                    resultsToEvaluate='computeClassProbabilities',
                    fptype='float',
                ).compute(input, daal_model).probabilities[:, 1]
            return clf.predict(input)
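Note that predict, as written, converts the booster on every call. If the converted model can be reused across requests, the conversion could be done once and cached. A minimal sketch of that idea follows; the LazyConverted helper and its argument names are hypothetical and not part of the article's repository.

```python
import threading


class LazyConverted:
    """Run an expensive conversion once and reuse the result afterwards."""

    def __init__(self, load_source, convert):
        self._load_source = load_source  # hypothetical: e.g. ScoringService.get_model
        self._convert = convert          # hypothetical: wraps the daal4py conversion
        self._lock = threading.Lock()
        self._converted = None

    def get(self):
        # Double-checked locking so concurrent requests convert only once.
        if self._converted is None:
            with self._lock:
                if self._converted is None:
                    self._converted = self._convert(self._load_source())
        return self._converted
```

In predictor.py this might be wired up as LazyConverted(ScoringService.get_model, lambda clf: d4p.get_gbt_model_from_xgboost(clf.get_booster())), with predict calling get() instead of converting inline.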

- nginx.conf: The configuration for the nginx master server that manages the multiple workers. In most cases, you can use this file as-is.

- requirements.txt: Defines all of the dependencies required by our image.

    boto3
    flask
    gunicorn
    numpy==1.21.4
    pandas==1.3.5
    sagemaker==2.93.0
    scikit-learn==0.24.2
    xgboost==1.5.0
    daal4py==2021.7.1

- Dockerfile: This file is responsible for configuring our custom SageMaker image.

    FROM public.ecr.aws/docker/library/python:3.8

    # copy requirement file and install python lib
    COPY requirements.txt /build/
    RUN pip --no-cache-dir install -r /build/requirements.txt

    # install programs for proper hosting of our endpoint server
    RUN apt-get -y update && apt-get install -y --no-install-recommends \
            nginx \
            ca-certificates \
        && rm -rf /var/lib/apt/lists/*

    # We update PATH so that the train and serve programs are found when the container is invoked.
    ENV PATH="/opt/program:${PATH}"

    # Set up the program in the image
    COPY xgboost_model_code/train /opt/program/train
    COPY xgboost_model_code/serve /opt/program/serve
    COPY xgboost_model_code/nginx.conf /opt/program/nginx.conf
    COPY xgboost_model_code/predictor.py /opt/program/predictor.py
    COPY xgboost_model_code/wsgi.py /opt/program/wsgi.py

    # set executable permissions for all scripts
    RUN chmod +x /opt/program/train
    RUN chmod +x /opt/program/serve
    RUN chmod +x /opt/program/nginx.conf
    RUN chmod +x /opt/program/predictor.py
    RUN chmod +x /opt/program/wsgi.py

    # set the working directory
    WORKDIR /opt/program

Docker executes the following steps, as defined in the Dockerfile: pull a base image from the AWS public container registry, copy and install the dependencies defined in our requirements.txt file, install the programs needed to host our endpoint server, add /opt/program to PATH, copy all relevant files into our image, give all files executable permissions, and set the WORKDIR to /opt/program.

Understanding how SageMaker will use our image

Because the same image is used for both training and hosting, Amazon SageMaker runs your container with the argument train during training and serve when hosting your endpoint. Now let’s unpack exactly what is happening during the training and hosting phases.
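To try both phases locally before involving SageMaker, you can start the container with the same arguments yourself. The sketch below only builds the docker run command lines. The local_run_command helper is hypothetical, and it assumes a local test directory is mounted at /opt/ml and that the inference server listens on port 8080, the port SageMaker expects.

```python
def local_run_command(image, phase, test_dir, port=8080):
    """Build a `docker run` command that mimics how the container is started."""
    if phase not in ("train", "serve"):
        raise ValueError("phase must be 'train' or 'serve'")
    # Mount a local directory in place of the /opt/ml tree SageMaker provides.
    cmd = ["docker", "run", "--rm", "-v", f"{test_dir}:/opt/ml"]
    if phase == "serve":
        # Publish the inference server's port (assumed to be 8080) on the host.
        cmd += ["-p", f"{port}:8080"]
    return cmd + [image, phase]
```

The resulting list can be passed to subprocess.run, for example with a hypothetical image name such as "xgb-daal4py" and a local test directory laid out like the /opt/ml tree.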

Training Phase:

- Your train script is run just like a regular Python program. Several files are laid out for your use under the /opt/ml directory:

    /opt/ml
    |-- input
    |   |-- config
    |   |   |-- hyperparameters.json
    |   |
    |   `-- data
    |       `--
    |           `--
    |-- model
    |   `--
    `-- output
        `-- failure

- /opt/ml/input/config contains information to control how your program runs. hyperparameters.json is a JSON-formatted dictionary of hyperparameter names to values.

- /opt/ml/input/data/ (for File mode) contains the data for model training.

- /opt/ml/model/ is the directory where you write the model that your algorithm generates. SageMaker will package any files in this directory into a compressed tar archive file.

- /opt/ml/output is a directory where the algorithm can write a file failure that describes why the job failed.
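The directory contract above can be sketched as a small skeleton for a train script. This is an illustration under stated assumptions, not the article's actual train file: the train_fn callback and the "training" channel name are hypothetical, and the model-fitting code itself is left to the callback.

```python
import json
import os
import traceback


def load_hyperparameters(prefix="/opt/ml"):
    """Read hyperparameters.json; SageMaker passes every value as a string."""
    path = os.path.join(prefix, "input", "config", "hyperparameters.json")
    with open(path) as f:
        return json.load(f)


def list_training_files(prefix="/opt/ml", channel="training"):
    """List the input files of one channel (the channel name is an assumption)."""
    data_dir = os.path.join(prefix, "input", "data", channel)
    return sorted(os.path.join(data_dir, name) for name in os.listdir(data_dir))


def run_training(train_fn, prefix="/opt/ml"):
    """Call train_fn and record any error in <prefix>/output/failure.

    train_fn(hyperparameters, files, model_dir) is a hypothetical callback
    that fits the model and writes its artifacts into model_dir.
    Returns the process exit code: 0 on success, 1 on failure.
    """
    model_dir = os.path.join(prefix, "model")
    try:
        os.makedirs(model_dir, exist_ok=True)
        train_fn(load_hyperparameters(prefix), list_training_files(prefix), model_dir)
        return 0
    except Exception:
        # Writing the failure file lets SageMaker report why the job failed.
        output_dir = os.path.join(prefix, "output")
        os.makedirs(output_dir, exist_ok=True)
        with open(os.path.join(output_dir, "failure"), "w") as f:
            f.write("Exception during training:\n" + traceback.format_exc())
        return 1
```

A train script would typically end with sys.exit(run_training(my_train_fn)), so that a non-zero exit code marks the training job as failed.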

Hosting Phase:

Hosting has a very different model than training because hosting responds to inference requests via HTTP.

SageMaker will target two endpoints in the container:

- /ping will receive GET requests from the infrastructure. Your program returns 200 if the container is up and accepting requests.

- /invocations is the endpoint that receives client inference POST requests. The format of the request and the response is up to the algorithm.
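To make the two routes concrete, here is a minimal sketch written as a bare WSGI callable rather than the Flask app used in predictor.py, so it runs with the standard library alone. The load_model and predict callbacks are hypothetical, and the JSON request and response format is an assumption, since the format is up to the algorithm.

```python
import json


def make_app(load_model, predict):
    """Return a WSGI app exposing /ping and /invocations.

    load_model() and predict(model, payload) are hypothetical callbacks.
    """

    def respond(start_response, status, body):
        data = body.encode("utf-8")
        start_response(status, [("Content-Type", "application/json"),
                                ("Content-Length", str(len(data)))])
        return [data]

    def app(environ, start_response):
        method = environ["REQUEST_METHOD"]
        path = environ.get("PATH_INFO", "")
        if path == "/ping" and method == "GET":
            # Report healthy only if the model can be loaded.
            try:
                healthy = load_model() is not None
            except Exception:
                healthy = False
            status = "200 OK" if healthy else "404 Not Found"
            return respond(start_response, status, "{}")
        if path == "/invocations" and method == "POST":
            # Read the request body and run inference on the parsed payload.
            length = int(environ.get("CONTENT_LENGTH") or 0)
            payload = json.loads(environ["wsgi.input"].read(length))
            result = predict(load_model(), payload)
            return respond(start_response, "200 OK", json.dumps(result))
        return respond(start_response, "404 Not Found", "{}")

    return app
```

Because gunicorn serves any WSGI callable, an app built this way could be exposed from wsgi.py in the same way as the Flask app.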

Building Image and Registering to ECR

We will need to make our image available to SageMaker. There are other image registries, but we will use AWS Elastic Container Registry (ECR).

The steps to feed your custom image to a SageMaker pipeline are outlined in the accompanying article: A Detailed Guide for Building Hardware Accelerated MLOps Pipelines in SageMaker.

If you need help building your image and pushing it to ECR, follow this tutorial: Creating an ECR Registry and Pushing a Docker Image
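For orientation, an image pushed to ECR is addressed by a URI that combines your account ID, region, repository name, and tag. The sketch below composes that URI and the usual build, tag, and push commands. Both helper functions are hypothetical, and authenticating Docker against the registry is a separate step that is omitted here.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Compose the URI under which an image is stored in a private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def build_and_push_commands(account_id, region, repository, tag="latest"):
    """Return the docker commands to build the image and push it to ECR."""
    uri = ecr_image_uri(account_id, region, repository, tag)
    return [
        ["docker", "build", "-t", repository, "."],  # build from the local Dockerfile
        ["docker", "tag", repository, uri],          # tag the image with the ECR URI
        ["docker", "push", uri],                     # upload the image to the registry
    ]
```

Each command list can be run with subprocess.run(cmd, check=True) from the directory that contains the Dockerfile.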

Conclusion and Discussion

Amazon SageMaker provides a useful platform for prototyping and deploying machine learning pipelines. However, it isn’t easy to use your own custom training and inference code, because the underlying image must be built to SageMaker’s specifications. In this article, we addressed this challenge by showing you how to:

- Configure an XGBoost training script

- Configure Flask APIs for inference endpoints

- Convert models to daal4py format to accelerate inference

- Package all of the above into a Docker image

- Register the custom SageMaker image in AWS Elastic Container Registry

Sources:

- SageMaker XGBoost container Repo | https://github.com/aws/sagemaker-xgboost-container

- AI Kit SageMaker Templates Repo | https://github.com/eduand-alvarez/ai-kit-sagemaker-templates

Guide to Implementing Custom Accelerated AI Libraries in SageMaker with oneAPI and Docker was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
