Stereo Vision System for 3D Tracking


Source: towardsdatascience.com

Two eyes are all you need

Like the vast majority of sighted animals on this planet, we have two eyes. This marvelous feature of our evolution allows us to see the environment in three dimensions.

To obtain 3D information from a scene, we can mimic binocular vision with at least two cameras working together. Such a setup is called a stereo vision system. When properly calibrated, each camera supplies a constraint on the 3D coordinates of a given feature point. With at least two calibrated cameras, it is possible to calculate the 3D coordinates of the feature point.

In this article, we’ll calibrate a pair of cameras, and use this calibration to compute the 3D coordinates of a feature point tracked in a series of images. You can find the corresponding code in this repository.

Stereo Vision System

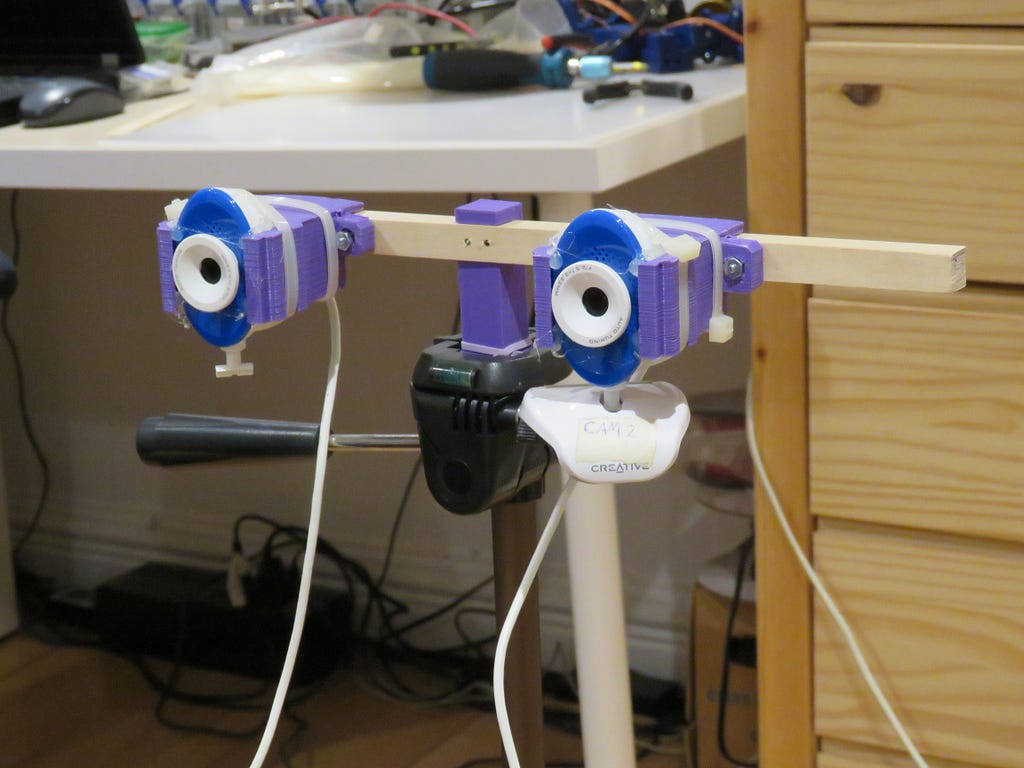

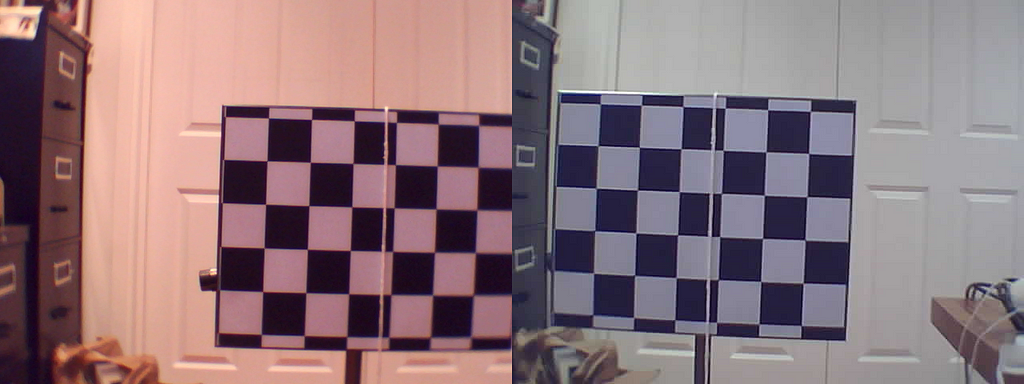

Figure 1 shows the stereo vision system that I have been using. As you can see, nothing fancy: a pair of webcams, rigidly held together with 3D printed parts, tie wraps, and hot glue.

I happened to have two webcams of the same model lying around in a drawer, but identical cameras are not necessary. Since the cameras get calibrated independently, they can have different intrinsic parameters and still play their role in a stereo vision system.

Calibration of the Cameras

The Projection Matrix

The calibration of the cameras boils down to computing their projection matrix. The projection matrix of a pinhole camera model is a 3x4 matrix that allows one to calculate the pixel coordinates of a feature point from its 3D coordinates in a world reference frame.

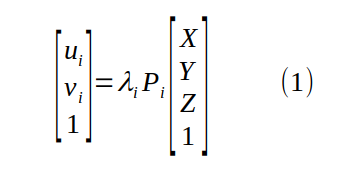

In equation (1), i refers to the camera index. In a system of two cameras, i belongs to {1, 2}.

Pᵢ is the 3x4 projection matrix of camera i.

(uᵢ, vᵢ) are the pixel coordinates of the feature point, as seen from camera i. (X, Y, Z) are the feature point 3D coordinates.

The scalar λᵢ is the scaling factor that preserves the equation homogeneity (i.e. the last element of the vectors on both sides is 1). Its presence comes from the loss of information that happens when a 3D point is projected on a 2D plane (the camera sensor), as multiple 3D points map to the same 2D point.
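To make equation (1) concrete, here is a minimal NumPy sketch of the projection. The matrix `P_example` is a made-up camera (focal length of 800 pixels, principal point at (320, 240), located at the world origin), not one of the calibrated webcams, and the function name `project` is only illustrative. The example also shows the loss of information mentioned above: two 3D points on the same ray land on the same pixel.

```python
import numpy as np

def project(P, xyz):
    """Project a 3D point to pixel coordinates with a 3x4 projection matrix."""
    P = np.asarray(P, dtype=float)
    homogeneous = np.append(np.asarray(xyz, dtype=float), 1.0)  # (X, Y, Z, 1)
    lam_u, lam_v, lam = P @ homogeneous  # (lambda*u, lambda*v, lambda)
    return np.array([lam_u / lam, lam_v / lam])

# Hypothetical camera, for illustration only
P_example = np.array([
    [800.0, 0.0, 320.0, 0.0],
    [0.0, 800.0, 240.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
])

uv_near = project(P_example, (0.1, 0.05, 1.0))
uv_far = project(P_example, (0.2, 0.10, 2.0))  # same ray, twice as far
print(uv_near, uv_far)  # both points map to the same pixel
```

Dividing by the third component is what removes λᵢ, which is why a single view cannot tell the two points apart.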

Assuming we know the projection matrix of the cameras and we have the pixel coordinates of a feature point, our goal is to isolate (X, Y, Z).

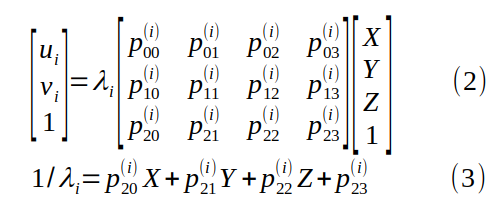

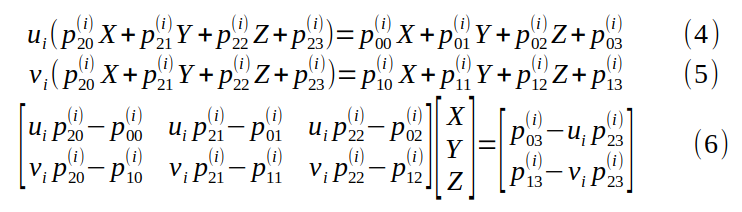

Inserting (3) into the first two rows of (2):
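One way to write this step out is shown below. The notation is an assumption made here: p⁽ⁱ⁾ⱼₖ stands for the entry in row j and column k of Pᵢ, and the equations are left unnumbered since the original numbering may differ. Expanding equation (1) row by row gives:

```latex
\begin{aligned}
\lambda_i u_i &= p^{(i)}_{11} X + p^{(i)}_{12} Y + p^{(i)}_{13} Z + p^{(i)}_{14} \\
\lambda_i v_i &= p^{(i)}_{21} X + p^{(i)}_{22} Y + p^{(i)}_{23} Z + p^{(i)}_{24} \\
\lambda_i     &= p^{(i)}_{31} X + p^{(i)}_{32} Y + p^{(i)}_{33} Z + p^{(i)}_{34}
\end{aligned}
```

Substituting the third row for λᵢ in the first two rows and collecting the terms in X, Y and Z:

```latex
\begin{aligned}
\left(p^{(i)}_{31} u_i - p^{(i)}_{11}\right) X
  + \left(p^{(i)}_{32} u_i - p^{(i)}_{12}\right) Y
  + \left(p^{(i)}_{33} u_i - p^{(i)}_{13}\right) Z
  &= p^{(i)}_{14} - p^{(i)}_{34} u_i \\
\left(p^{(i)}_{31} v_i - p^{(i)}_{21}\right) X
  + \left(p^{(i)}_{32} v_i - p^{(i)}_{22}\right) Y
  + \left(p^{(i)}_{33} v_i - p^{(i)}_{23}\right) Z
  &= p^{(i)}_{24} - p^{(i)}_{34} v_i
\end{aligned}
```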

Equation (6) shows that each camera view supplies two linear equations in the three unknowns (X, Y, Z). With at least two camera views of the same 3D point, we get four or more equations in three unknowns: an overdetermined system of linear equations that we can solve for the 3D coordinates, for instance in the least-squares sense.
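As a sketch of how such a system can be solved, the snippet below stacks the two equations of each view and calls `np.linalg.lstsq`. The function name `triangulate` and the two camera matrices are assumptions chosen for illustration (two identical hypothetical cameras, the second one shifted by 0.1 units along X); the repository code may be organized differently.

```python
import numpy as np

def triangulate(projection_matrices, pixel_coords):
    """Least-squares 3D point from two or more (P, (u, v)) views."""
    A_rows, b_rows = [], []
    for P, (u, v) in zip(projection_matrices, pixel_coords):
        P = np.asarray(P, dtype=float)
        # Row from the u equation: (p3 u - p1) . (X, Y, Z) = p14 - p34 u
        A_rows.append(u * P[2, :3] - P[0, :3])
        b_rows.append(P[0, 3] - u * P[2, 3])
        # Row from the v equation: (p3 v - p2) . (X, Y, Z) = p24 - p34 v
        A_rows.append(v * P[2, :3] - P[1, :3])
        b_rows.append(P[1, 3] - v * P[2, 3])
    xyz, *_ = np.linalg.lstsq(np.array(A_rows), np.array(b_rows), rcond=None)
    return xyz

def project(P, xyz):
    """Helper for the demo: pixel coordinates of a 3D point."""
    lam_u, lam_v, lam = P @ np.append(xyz, 1.0)
    return lam_u / lam, lam_v / lam

# Hypothetical stereo pair, for illustration only
P1 = np.array([[800.0, 0.0, 320.0, 0.0],
               [0.0, 800.0, 240.0, 0.0],
               [0.0, 0.0, 1.0, 0.0]])
P2 = np.array([[800.0, 0.0, 320.0, -80.0],
               [0.0, 800.0, 240.0, 0.0],
               [0.0, 0.0, 1.0, 0.0]])

xyz_true = np.array([0.05, -0.02, 1.5])
views = [project(P1, xyz_true), project(P2, xyz_true)]
xyz_est = triangulate([P1, P2], views)
print(xyz_est)
```

With noise-free pixel coordinates the four equations are consistent and the estimate matches the true point; with real detections, the least-squares solution spreads the disagreement between the views.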

Great! But how do we compute the projection matrices?

Computation of the Projection Matrix

To compute the projection matrix of a camera, we need a large number of known 3D points and their corresponding pixel coordinates. We use a checkerboard calibration pattern placed as precisely as possible at measured distances from the stereo rig.

The repository includes the calibration pattern images, for both cameras.

You can run the whole calibration procedure with this Python program.

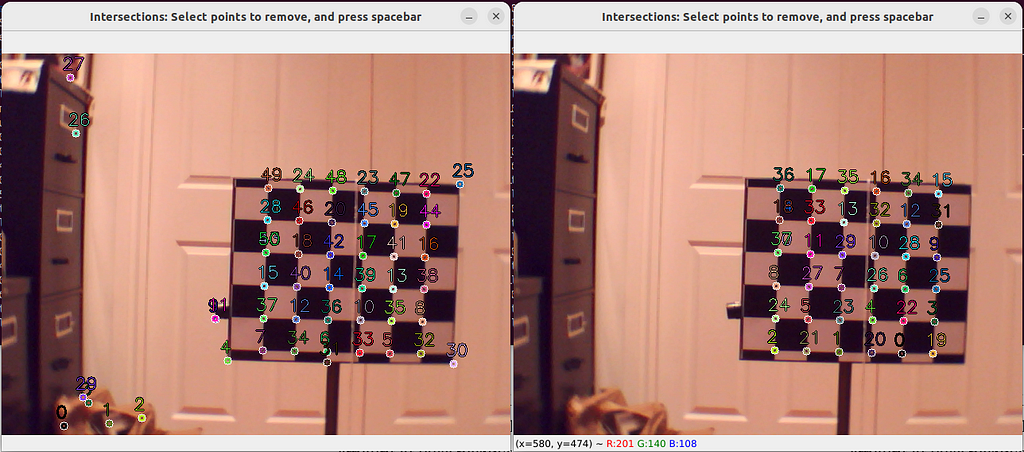

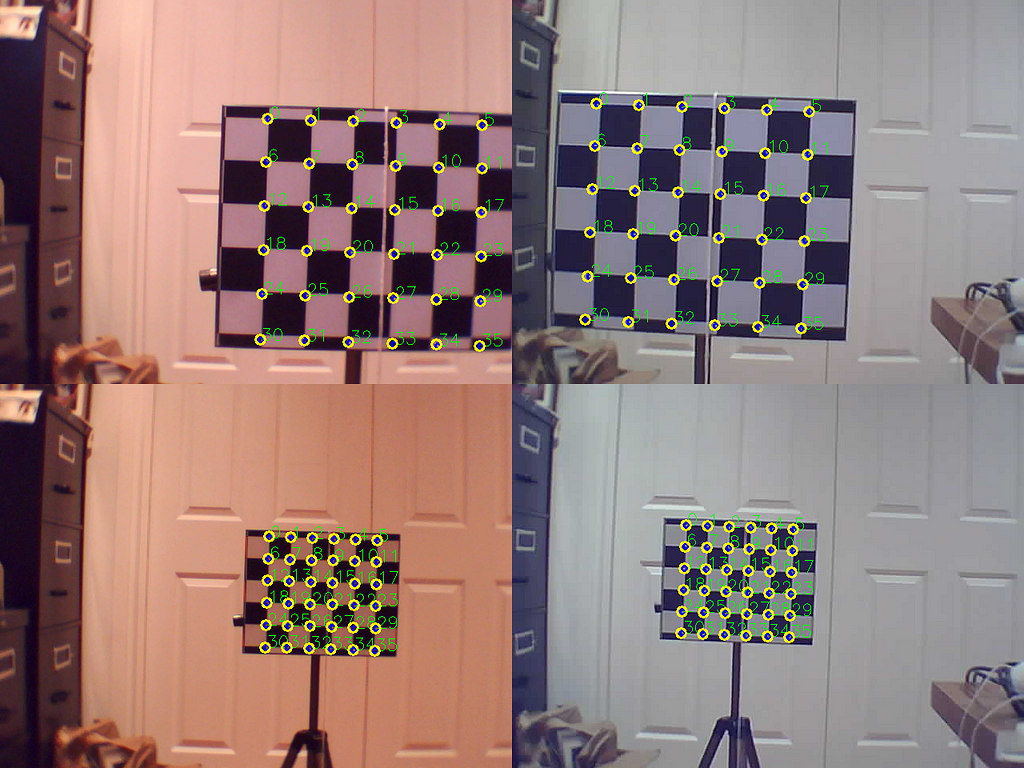

The square intersections in each calibration image get detected with an instance of the class CheckerboardIntersections, which I introduced in my previous article.

In this case, we set the intersection detector parameters to be relatively sensitive, such that all the real intersections are detected, plus a manageable number of false positives (in other words, perfect recall, reasonable precision). Since the calibration is a process that we’ll only do once, we can afford the work of manually removing the false positives. The program iterates over the calibration pattern images and asks the user to select the false positives.

As we saw in my article about the compensation of camera radial distortion, the raw intersection coordinates must be undistorted. The radial distortion model was previously computed for both cameras, and the corresponding files are already in the repository. The undistorted coordinates are the ones that we’ll use to build the projection matrix of a pinhole camera model.

At this point, we have 7 (captures at different distances) x 6 x 6 (square intersection points) = 252 correspondences between a 3D point and pixel coordinates for each camera.

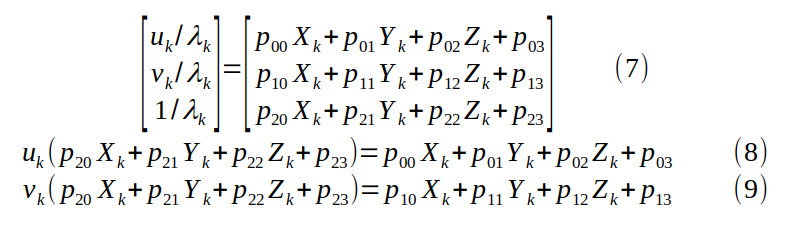

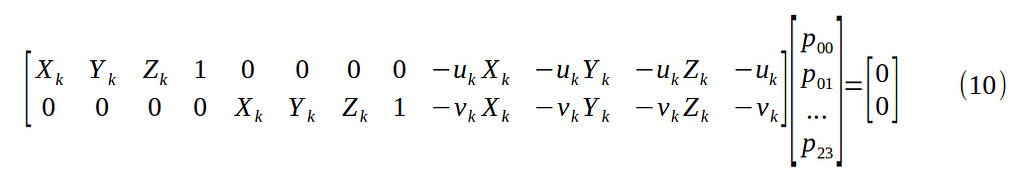

To compute the entries of the projection matrix, we’ll start again with equation (1), but this time assuming we know (u, v)ₖ and (X, Y, Z)ₖ, and we want to solve for the entries of P. The subscript k refers to the index of the pair (pixel_coords, XYZ_world). The scalars λₖ (one for each point) are also unknown. We can eliminate the λₖ from the system of linear equations with a bit of manipulation:
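A sketch of that manipulation, with pⱼₖ denoting the entry in row j and column k of P (a notation assumed here, and with the equations left unnumbered): the third row of equation (1) gives λₖ, which we substitute into the first two rows.

```latex
\begin{aligned}
\lambda_k &= p_{31} X_k + p_{32} Y_k + p_{33} Z_k + p_{34} \\[4pt]
p_{11} X_k + p_{12} Y_k + p_{13} Z_k + p_{14}
  - u_k \left(p_{31} X_k + p_{32} Y_k + p_{33} Z_k + p_{34}\right) &= 0 \\
p_{21} X_k + p_{22} Y_k + p_{23} Z_k + p_{24}
  - v_k \left(p_{31} X_k + p_{32} Y_k + p_{33} Z_k + p_{34}\right) &= 0
\end{aligned}
```

Both remaining equations are linear and homogeneous in the 12 entries of P, and λₖ no longer appears.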

Equations (8) and (9) can be written in the form Ap = 0:

Equation (10) shows that each correspondence supplies two homogeneous linear equations in 12 unknowns. Because the system is homogeneous, P is only determined up to a scale factor, which leaves 11 degrees of freedom, so at least 6 correspondences are necessary to solve for the entries of P. We also need our 3D points to be non-coplanar. With 252 correspondences from 7 planes, we are safe.
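A common way to solve Ap = 0 is to take the right singular vector of A associated with its smallest singular value. The sketch below follows that approach; it is not necessarily what the calibration program does, and the ordering of the unknowns in `p`, the function name, and the synthetic camera `P_true` are assumptions made for the example. The test data mimics the layout described above: a 6 x 6 grid of points at 7 distances.

```python
import numpy as np

def compute_projection_matrix(xyz_points, uv_points):
    """Estimate a 3x4 projection matrix (up to scale) from correspondences."""
    rows = []
    for (X, Y, Z), (u, v) in zip(xyz_points, uv_points):
        # Unknowns ordered as (p11..p14, p21..p24, p31..p34)
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z, -u])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z, -v])
    A = np.array(rows, dtype=float)
    # The last row of Vt spans the (approximate) null space of A
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def project_all(P, xyz_points):
    """Pixel coordinates of N 3D points, as an (N, 2) array."""
    xyz_h = np.hstack([xyz_points, np.ones((len(xyz_points), 1))])
    projected = xyz_h @ P.T
    return projected[:, :2] / projected[:, 2:3]

# Hypothetical camera used to generate synthetic correspondences
P_true = np.array([[800.0, 0.0, 320.0, 40.0],
                   [0.0, 800.0, 240.0, -20.0],
                   [0.0, 0.0, 1.0, 0.5]])

# 7 planes x 6 x 6 grid points = 252 non-coplanar 3D points (arbitrary units)
grid = np.linspace(-0.25, 0.25, 6)
depths = np.linspace(1.0, 2.2, 7)
xyz = np.array([(x, y, z) for z in depths for y in grid for x in grid])
uv = project_all(P_true, xyz)

P_est = compute_projection_matrix(xyz, uv)
max_error = np.abs(project_all(P_est, xyz) - uv).max()
print(max_error)  # reprojection error in pixels
```

Note that `P_est` generally differs from `P_true` by a scale factor (possibly negative). This does not matter, since the scale cancels when we divide by the third component.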

After execution of the calibration program, we can verify that the projection matrices correctly project the known 3D points back to their undistorted pixel coordinates.
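This verification can also be expressed as a number: the distance, in pixels, between each detected intersection and the projection of its 3D point. Here is a minimal sketch, assuming the correspondences are stored as NumPy arrays; the function name and the sample values are hypothetical.

```python
import numpy as np

def reprojection_errors(P, xyz_points, uv_points):
    """Pixel distance between measured points and projected 3D points."""
    xyz_points = np.asarray(xyz_points, dtype=float)
    uv_points = np.asarray(uv_points, dtype=float)
    xyz_h = np.hstack([xyz_points, np.ones((len(xyz_points), 1))])
    projected = xyz_h @ np.asarray(P, dtype=float).T  # rows: (lambda*u, lambda*v, lambda)
    uv_projected = projected[:, :2] / projected[:, 2:3]
    return np.linalg.norm(uv_projected - uv_points, axis=1)

# Hypothetical camera and measurements, for illustration only
P_example = np.array([[800.0, 0.0, 320.0, 0.0],
                      [0.0, 800.0, 240.0, 0.0],
                      [0.0, 0.0, 1.0, 0.0]])
xyz_known = [(0.1, 0.05, 1.0), (0.0, 0.0, 2.0)]
uv_measured = [(400.0, 280.0), (323.0, 244.0)]  # second point is off by (3, 4)

errors = reprojection_errors(P_example, xyz_known, uv_measured)
print(errors)
```

A large error on a few points typically hints at a wrong correspondence or a leftover false positive, while a large error everywhere points to a problem with the calibration itself.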

In Figure 5, the blue dots are the points found by the intersection detector, after compensating for the radial distortion. The yellow circles are the projections of the 3D points in the image. We can see that the projection matrices of both cameras do a good job. Note that the annotated points far from the image center do not coincide with the checkerboard intersections, because of the radial distortion compensation.

3D Tracking

We can now use our calibrated stereo system to compute the 3D location of a feature point. To demonstrate that, we’ll track an easy-to-detect feature point (the center of a red square) in a series of images.

You can find the tracking program here.

Figure 6 shows an example of the detection of the tracked red square. In short, since the area around the red square is blue, the tracker first identifies the regions of the image where the blue component is dominant. It then looks, within the blue-dominated area, for a region where the red component dominates. For the details, please refer to the code.
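To give an idea of the principle, here is a simplified NumPy sketch of a color-dominance detector. It is not the code from the repository: the function name, the `margin` threshold, the use of a bounding box around the blue pixels, and the RGB channel order are all assumptions (images loaded with OpenCV would be in BGR order).

```python
import numpy as np

def find_red_square_center(image_rgb, margin=40):
    """Return (x, y) of the red-dominated pixels inside the blue area, or None."""
    img = image_rgb.astype(int)  # avoid uint8 overflow in the comparisons
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    blue_mask = (b > r + margin) & (b > g + margin)
    red_mask = (r > g + margin) & (r > b + margin)
    if not blue_mask.any():
        return None
    # Bounding box of the blue-dominated pixels
    ys, xs = np.nonzero(blue_mask)
    inside_blue = np.zeros_like(red_mask)
    inside_blue[ys.min():ys.max() + 1, xs.min():xs.max() + 1] = True
    candidates = red_mask & inside_blue
    if not candidates.any():
        return None
    cy, cx = np.nonzero(candidates)
    return float(cx.mean()), float(cy.mean())

# Synthetic test image: gray background, blue rectangle, red square inside it
image = np.full((100, 120, 3), 128, dtype=np.uint8)
image[20:80, 30:100] = (0, 0, 255)
image[40:50, 50:60] = (255, 0, 0)
image[5:10, 5:10] = (255, 0, 0)  # red distractor outside the blue area

center = find_red_square_center(image)
print(center)
```

Restricting the search to the blue area is what makes the detection robust to other red objects in the scene, such as the distractor in the corner of the synthetic image.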

Using the undistorted pixel coordinates from both cameras, and their corresponding projection matrices, the 3D location of the feature point can be computed for each image in the series, as displayed in the animation above.

Conclusion

We built a simple stereo vision system with a pair of webcams. We calibrated both cameras by compensating for their radial distortion and computing their projection matrices. We then used our calibrated system to track the 3D location of a feature point in a series of images.

Please feel free to experiment with the code.

If you have an application of stereo vision in mind, let me know. I would be very interested to hear about it!

Stereo Vision System for 3D Tracking was originally published in Towards Data Science on Medium.
