📚 Speaking Probes: Self-Interpreting Models?

💡 Newskategorie: AI Nachrichten

🔗 Quelle: towardsdatascience.com

Can language models aid in their interpretation?

In this post, I experiment with the idea that language models can be coaxed to explain vectors coming from their parameters. It turns out to work better than you might expect, but still much work needs to be done.

As is customary in scientific papers, I use “we” instead of “I” (among other reasons, because it makes the text sound a bit less self-centered..).

This is not really a complete work, but more like a preliminary report on an idea I believe might be useful, and should be shared. As a result, I only carried out basic experiments to sanity-check the method. I hope other researchers would take up the work from where I started, and see if the limitations of the methods I suggest are surmountable. This work is aimed at professionals, but anyone with decent knowledge of transformers should be comfortable reading it.

Introduction

In recent years, many interpretability methods have been developed in Natural Language Processing [Kadar et al., 2017; Na et al., 2019; Geva et al., 2020; Dar et al., 2022]. In parallel, strong language models have taken the field by storm [Devlin et al., 2018; Liu et al., 2019; Radford et al., 2019; Brown et al., 2020], exhibiting unexpected emergent capabilities [Brown et al., 2020; Hendrycks et al., 2021; Wei et al., 2022]. One may wonder if strong language skills allow language models to communicate about their inner state. This work is a brief report on our explorations of this conjecture. In this work, we will design natural language prompts and inject model parameters as virtual tokens in the input. The prompts are designed to instruct the model to explain words — but instead of a real word they are given a virtual token representing a model parameter. The model then generates a sequence continuing the prompt. We will observe this technique’s ability to explain model parameters which we have existing explanations for. We call the new technique “speaking probes”. We will also discuss on a high-level possible justifications for why one might expect the method to work.

Interpretability researchers are encouraged to use speaking probes as a tool to guide their analysis. We do not suggest relying on their answers indiscriminately, as they are not sufficiently grounded. However, they have the important advantage of possessing the expressive power of natural language. Our queries are out of distribution for the model in the zero-shot case as it was only trained with real tokens. However, we hypothesize its inherent skills at manipulating its representations will make it easy to learn the new task.

We provide the following two resources for researchers interested in exploring this technique for themselves:

GitHub - guyd1995/speaking-probes

- Demo (🤗 HuggingFace): https://huggingface.co/spaces/guy-tau/speaking-probes — This can be slow, so apart from basic experimentation, it is better to open one of the notebooks in the repository on Colab

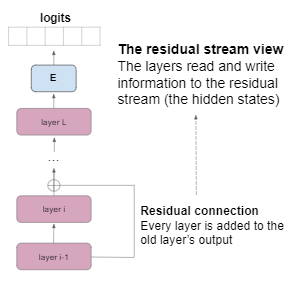

Background: The Residual Stream

This has been explained in more detail in the background section of my previous post: Analyzing Transformers in Embedding Space — Explained

We rely on a useful view of the transformer through its residual connections originally introduced in nostalgebraist [2020]. Specifically, each layer takes a hidden state as input and adds information to the hidden state through its residual connection. Under this view, the hidden state is a residual stream passed along the layers, from which information is read, and to which information is written at each layer. Elhage et al. [2021] and Geva et al. [2022b] observed that the residual stream is often barely updated in the last layers, and thus the final prediction is determined in early layers and the hidden state is mostly passed through the later layers. An exciting consequence of the residual stream view is that we can project hidden states in every layer into embedding space by multiplying the hidden state with the embedding matrix E, treating the hidden state as if it were the output of the last layer. Geva et al. [2022a] used this approach to interpret the prediction of transformer-based language models, and we follow a similar approach.

Showcasing Speaking Probes

Overview

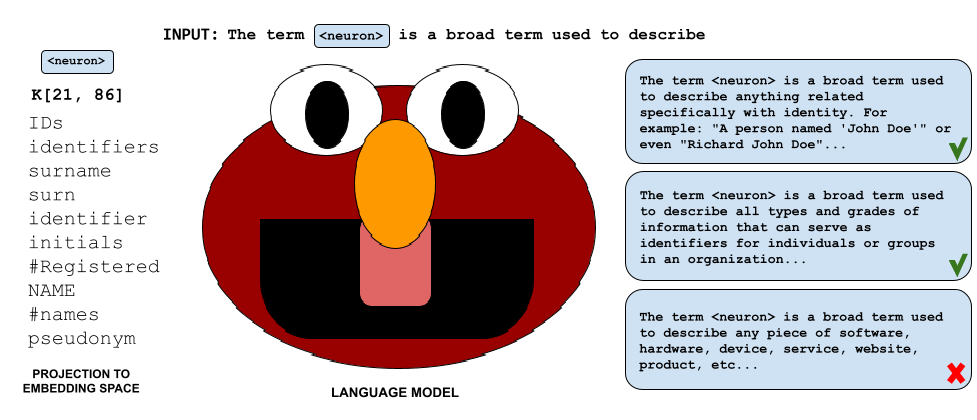

Our intuition builds on the residual stream view. In the residual stream view, parameters of the models are added to the hidden state on a more or less equal footing with token embeddings. More generally, the residual view hints that there’s a good case for considering parameter vectors, hidden states, and token embeddings to be using the same “language”. “Syntactically”, we can use any continuous representation — be it a parameter vector or hidden state — as a virtual token. We will use the term “neuron” interchangeably with “virtual token”.

We will focus on parameters in this article, as hidden states seem to be more complicated to analyze — which stands to reason since they are mixtures of parameters. We show that parameter vectors can be used alongside token embeddings in the input prompt and produce meaningful responses. We hypothesize a neuron eventually collapses into a token that is related to the concepts it encodes.

Our goal is to use the strong communication skills language models possess for expressing their latent knowledge. We suggest a few prompts in which the model is requested to explain a word. Instead of a word, it is given a virtual token representing a vector in the parameters. We represent the virtual token in the prompt by the label <neuron> (when running the model, its token embedding is simply replaced with the neuron we want to interpret). We then generate the continuation of the prompt, which is the language model’s response.

Prompts

The term "<neuron>" means

- Synonyms of small: small, little, tiny, not big

- Synonyms of clever: clever, smart, intelligent, wise

- Synonyms of USA: USA, US, United States

- Synonyms of Paris: Paris, city of lights, city of love, capital of

France

- Synonyms of error: error, mistake, fallacy, wrong

- Synonyms of <neuron>:

The term <neuron> is a broad term related to

From Wikipedia: "<neuron> is

More examples are available in the repository under prompts/

Method

We feed a prompt into the model and generate the continuation of the text with <neuron>’s “token embedding” being the neuron we want to interpret. To produce diverse outputs, we generate with sampling and not just greedy decoding. We will see a few examples below.

In this work, we will focus on feedforward (FF) keys (the first layer of the feed-forward sublayer), as they seem somewhat easier to interpret than FF values (the second layer). Each layer l has a matrix K_l (do NOT confuse with attention keys) — each of its columns can be considered interpreted individually.

To test our method, we use models we already have a good idea of what they mean in embedding space. Obviously, these are the easiest cases we can consider — so these experiments are just a sanity check. For syntactic sugar, we use <param_i_j> for a neuron representing the j-th FF key in the i-th layer. All the examples below are from GPT-2 medium. The generation hyperparameters we use are:

temperature=0.5

repetition_penalty=2.

do_sample=True

max_new_tokens=50

min_length=1

Examples: Comparison With Embedding Space Projection

Japanese Names

When projected to the embedding space <param_10_8> seems to relate to Japanese names and generally speaking terms associated with Japan (* as far as I can tell):

'Miy', '#imaru', 'Gong', 'Jinn', 'Xia', 'Makoto', 'Kuro',

'Shin', '#Tai', 'Yamato', 'Tai', 'Ichigo', '#Shin', '#atsu',

'Haku', 'Chun','#ku', 'Qing', 'Tsuk', 'Hua', 'Jiang', 'Nanto',

'manga', 'Yosh', 'yen', 'Osaka', 'Qian', '#uku', '#iku', 'Yue',

'Choi', '#iru', 'Hai', '#uchi', '#Japan', 'Kyoto', '#kun',

'#ikan', '#ugu', 'Tsu'

Let’s see what the new method gives us:

The term "<param_10_8>" means

generate: The term " <param_10_8>" means the god of light.

It is said that a man named Kamiyama was born in this world, and thus

he became known as Kaname (風). The name for him comes from his

appearance while wearing an animal's skin

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_10_8>" means the spirit of a person, and

it is used to describe something like emotion.

In other words there are two types: 「Hō」 (Emotion) or 「Ikuoi''(Spirit).

The first type can be described as

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_10_8>" means a person of Japanese descent.

In the original, this is said to be an English word

meaning 'a man with red hair'. However in later versions it's used as

a derogatory reference towards people who speak poorly or do not

understand their own language

Discussion: while it hasn't given us a concise answer, it is not hard to deduce from the above samples that the term is indeed related to Japan and Japanese. As you can see, the model is not very truthful, even without the neuron being involved, spouting strange assertions like in the last sample.

Progress

<param_11_2> seems to be related to the idea of progress. If we look at it in the embedding space, we get:

'progressing', '#Progress', '#progress', '#osponsors', '#oppable',

'advancement', 'progress', 'Progress', '#senal', '#venge', 'queue',

'#pun', 'progression', '#wagon', 'advancing', '#cknowled', '#Goal',

'momentum', '#zag', '#hop', 'pursuits', '#encing', '#Improve',

'STEP', '#chini', 'standings'

Let’s see if we can get this from the new method:

The term "<param_11_2>" means

generate: The term " <param_11_2>" means to move forward.

If you're doing it right, your goal is not necessarily the same

as yours or others' goals. You may be able for a while (say 7-10

years) at least achieve some of what they are achieving in

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_11_2>" means to move forward into

the future. If you want a list of possible things that could

happen in your life, this is what it looks like:

I have no idea how much I've learned or accomplished

since then (but if anything has changed for

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_11_2>" means to make a substantial

advance towards attaining the object of an undertaking.

<!--end--> _______________________________________________

Sent through by email, sent from archive@gmane-online:...

Discussion: we can consider this one quite good. As the model was trained on data from the internet, some answers might replicate the format of web discussions. Altogether, this parameter seems easy for the speaking probe to interpret.

Words that start with G

<param_0_0> appears to relate to words that begin with the letter “g”. In the embedding space, we see:

'#gd', '#GF', '#G', 'GN', '#GR', '#gat', 'Goff',

'#GW', 'g', '#GV', 'GS', 'Gw', '#GS', '#GBT',

'#gm', '#gp', 'Gork', 'Gow', '#GD', '#GP', '#gal',

'Gad', '#GC', '#g', 'GD', 'G', 'GA', '#gel', '#GG',

'Gemini', 'Gard', 'GR', '#Gs', '#gom', '#gran', 'gad',

'#gr', '#GM', '#GA', '#ga'

Let’s see what happens with speaking probes:

The term "<param_0_0>" means

generate: The term " <param_0_0>" means a character from the series.

(I'm not sure if this is an actual word, or just some sort of

translation error.)

A lot has been written about how much we love Doctor Who:

The Next Generation and what makes it such

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_0_0>" means a person who is not of

Greek origin.

In the United States, there are many people with names that

begin in G and end at g (such as: John Doe). The name was

originally spelled 'John' but it became common to spell

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_0_0>" means a small (6.5mm) hole in

the glass, which is formed when water flows through it and

enters into an open space inside the lens of your camera. The

image on this page was taken with my Olympus OM-D E M 50

- synonyms of small: small, little, tiny, not big

- synonyms of USA: USA, US, United States

- synonyms of Paris: Paris, city of lights, city of love, capital of France

- synonyms of error: error, mistake, fallacy, wrong

- synonyms of clever: clever, smart, intelligent, wise

- synonyms of <param_0_0>:

generate: - synonyms of small: small, little, tiny, not big

- synonyms of USA: USA, US, United States

- synonyms of Paris: Paris, city of lights, city of love, capital of France

- synonyms of error: error, mistake, fallacy, wrong

- synonyms of clever: clever, smart, intelligent, wise

- synonyms of <param_0_0>: G, man (male), woman(female)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: - synonyms of small: small, little, tiny, not big

- synonyms of USA: USA, US, United States

- synonyms of Paris: Paris, city of lights, city of love, capital of France

- synonyms of error: error, mistake, fallacy, wrong

- synonyms of clever: clever, smart, intelligent, wise

- synonyms of <param_0_0>: Gagarin rocket/rocket scientist

(from the Russian "Gigafar")

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: - synonyms of small: small, little, tiny, not big

- synonyms of USA: USA, US, United States

- synonyms of Paris: Paris, city of lights, city of love, capital of France

- synonyms of error: error, mistake, fallacy, wrong

- synonyms of clever: clever, smart, intelligent, wise

- synonyms of <param_0_0>: gg, go

Discussion: with the first prompt, the probe seems to be very unrelated to the embedding space interpretation of the parameter. The only hint we get in the right direction is “In the United States, there are many people with names that begin in G and end at g…” from the second sample. However, it is said in an indirect manner, and not as an answer to the question.

This is a good place to demonstrate the flexibility of speaking probes, as they let you reformulate the question and maybe get better results. One can arguably say that the second prompt is much better suited to the problem. While it is not too truthful, an overview of the responses can hint at the right direction — one sample responds “G” and then gives a few unrelated words. Another one replies with “gg, go”. While it’s not clear-cut, it can help to begin exploring. It is also generally advisable to work with more samples.

Identification

The parameter <param_21_86> is related to the following terms:

'IDs', 'identifiers', 'surname', 'surn', 'identifier', 'initials',

'#Registered', 'NAME', '#names', 'pseudonym', '#codes', 'nomine',

'names', 'username', '#IDs', 'ID', 'registration', '#76561',

'#soDeliveryDate', '#ADRA', 'CLSID', 'numbering', '#ername',

'#address', 'addresses', 'codes', '#Names', 'regist', 'name',

'Names', '#Address'

It seems to encompass terms related to unique identification.

Compare with the probe:

The term "<param_21_86>" means

generate: The term " <param_21_86>" means a unique identifier

for the person and/or vehicle of which an identification card

is issued by any government or agency.

(e) The name, address(s), dateof birth: State; Date that this

registration was last renewed ; Vehicle serial

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_21_86>" means a unique identifier

associated with an individual or entity.

<!--iframe-->

You can find more information about the new rules in this

article: http://www,copswatchdogusa-news/20120115-s

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <param_21_86>" means the unique identifier

assigned to a particular person or entity by law.

(2) The terms and conditions for registration of an account

may be changed from time-to -time without notice, at any

reasonable request made under section 14(3).

Synthetic Examples

It is also possible to create neurons that we know what they “should” mean. For example, we take the average of the embeddings of two tokens and see if the model can reconstruct them with an appropriate prompt. This helps us debug the method. We can gauge its reaction to different variants of neurons and improve prompts based on them for the actual neurons we care about.

In the following example, we add together the token embedding of the “children” and “dog”. Then we apply a speaking probe to it. The probe can do either of the following:

- it might collapse into one word deterministically,

- it might collapse into either of the words randomly,

- it might adopt a meaning that is semantically in the middle between the two (for example, “puppies”?)

- any other behavior

We can use this neuron as a toy example of the way the probe treats such polysemous neurons. Remember, however, the behavior may differ depending on the word.

generate: The term " <neuron>" means a person under the age of 18 years.

The terms are used in this chapter as follows:

(1) A child shall be considered to have attained legal maturity when he

or she has reached an understanding with his parent, guardian and other

persons that

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <neuron>" means any animal that is capable of

walking on two legs, but not a dog.

The phrase 'the children' refers to those who were born before

the adoption law was changed in 1996 and are now considered as age 18

or 19 years old

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The term " <neuron>" means any member of the genus

Canis familiarus, but does not include chimpanzees or gorillas.

(2) (a)(i), in paragraph 1, is used to mean a dog that has been

domesticated by human beings and whose natural

Interestingly, the probe seems to handle the ambiguity in the neuron moderately. The samples seem to refer to one token, but then they might get confused and talk about the other. Here’s another prompt we can try:

The invented word ' <neuron>' is a combination of the words

generate: The invented word'<neuron>' is a combination of the words

dog and child, which are derived from Latin. It was coined by

anthropologist Jules Verne in his book "A Short History" (1877).

The term became popularized as an adjective after it appeared on many

advertisements for products such to

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the words

dachshund and dog. A person who has no regard for their own life,

but only to what they see around them; one with an insatiable

appetite without restraint or control on it's actions - this kind

can be dangerous in

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the

words dog and child. The original meaning was that children

could be used to teach, but later this became associated with dogs

rather than people as in "dog" means man". We don't know why it's

called an animal instead; maybe because they

Let’s see what happens when we combine some more challenging tokens: “google” and “show”:

The invented word ' <neuron>' is a combination of the words

generate: The invented word'<neuron>' is a combination of the words

google and gmail. This term was coined in 2006 by Google's Senior

Vice President, Eric Schmidt to describe how people use their email

accounts (Google+).

I have spent many hours trying on different web sites with Gmail for

various reasons: I

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the words

google and gopher, which mean both internet search engines. There are

two main types: Google-based sites that can be visited on your computer

(e.g., http://www!google) or from other web browsers like Firefox &

Opera

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the words

google and gmail. The first example I found was from 2002, but it has

been used since then by many people on various websites to describe

their web pages (see for instance this article).

If you like what we do, please consider supporting

It looks like we’ve run out of luck here. The word “show” seems to have been absorbed by the word “google”. Let’s try to see if we can mitigate this by setting a smaller coefficient to the token embedding of “google” — we multiply it by 0.9 and get:

The invented word ' <neuron>' is a combination of the words

generate: The invented word'<neuron>' is a combination of the words

show and giggle. A gag was coined in 1971 by British writer John Updike,

who wrote it to describe an episode where TV presenter Peter Jennings

tries his hardest not laugh at something he's seen before (see below).

Gag

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the words

show, tell and lie ('tell'). This can be used to describe several types:

It's not just for kids. It also applies here at Wikipedia!

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

generate: The invented word'<neuron>' is a combination of the words

show and giggle. In all probability, this was used to describe something

that had no physical connection with comedy at first sight:

Gretchen Rubin's new book The Giggling Brain has just been published

by Oxford University Press; it looks

Seems it can recover “show” and “giggle” as a distorted version of “google”.

Discussion

Potential of the Method

The distinctive features that we want to capitalize on with this method are:

- Natural language output: both an advantage and disadvantage, it makes the output harder to evaluate, but it provides much greater flexibility than other methods.

- Inherent ability to manipulate latent representations: we use the model’s own capabilities of manipulating its latent representations. We assume they share the same latent space with the model parameters due to the residual stream view. Other techniques need to be trained or adjusted in some other way to the model’s latent space in order to “understand” it. The model is capable of decoding its own states naturally, which can be useful for interpretation.

In general, there is not much research on continuous vectors as first-class citizens in transformers. While ideas like prompt tuning [Lester et al., 2021], and exciting ideas like Hao et al. [2022] pass continuous inputs to the model, they require training to work and they are not used zero-shot. A central motif in this work is the investigation of whether some continuous vectors can be used like natural tokens without further training — under the assumption that they use the same “language” as the model.

Another useful feature of this technique is that it uses the model more or less as a black box, without much technical work involved. It is easy to implement and understand. Casting interpretation as a generation problem, we can leverage literature on generation from mainstream NLP for future work. Similarly, hallucinations are a major concern in speaking probes, but we can hope to be able to apply mainstream research approaches in the future to this method.

In total, this is perhaps the most modular interpretability method — it does not rely on a specifically tailored algorithm, and it can adopt insights from other areas in NLP to improve, without losing breath. Also, it is easy to experiment with (even for less academically inclined practitioners) and the search space landscape is very different than with other methods.

Possible Future Directions

- Eloquent. Too Eloquent: language models are trained to produce eloquent explanations. Factuality is less worrisome to them. These eloquent explanations are not to be taken literally.

- Word Collapse: our examples seem to exhibit the phenomenon of “word collapse’’ — where neurons are internally converted to a specific word, rather than keeping their original state of superposition. An interesting research question is whether the superposition can be retained.

- Layer Homogeneity: in this article, we implicitly assume we can take parameters from different layers and they will react similarly to our prompts. It is possible that some layers are more amenable to use with speaking probes than others. We call this layer homogeneity. We need to be cautious in assuming that all layers can be treated the same with respect to our method. Other internal differences may play a role in the ability of the method to cope with different neurons.

- Neuron Polysemy: especially in face of word collapse, it seems that neurons that carry multiple unrelated interpretations will have to be sampled multiple times to account for all their different meanings. We would like to be able to extract the different meanings more faithfully and “in one sitting’’.

- Better Prompts: this is not the main part of our work, but many papers show the benefits of using carefully engineered prompts [e.g., Liu et al., 2021].

- Intrusive Probes: our method is using the language model as a black box, feeding inputs to the model. As discussed earlier with respect to layer homogeneity, it might be that seeing top-layer parameters in the input might not be “digestible’’ by the model. A solution can be using similar prompts to the ones used in speaking probes, but only injecting the neuron later in the computation in the relevant layer.

- Other Types of Concepts: we have mainly discussed neurons that represent a category or a concept in natural language. We know that language models can work with code, but we haven’t considered this type of knowledge in this article. Also, it is interesting to use speaking probes to locate facts in model parameters. Facts might require a number of parameters working in unison — so it will be interesting to locate them and find prompts that will be able to extract these facts.

- Outputting Neurons: we played with the input in this article. One can imagine a scenario where the model can also output neurons as tokens. We wonder what could be other possible use cases for models capable of outputting model parameters or other kinds of neurons.

- More Models: we considered GPT-2 medium in this work, for conciseness. Larger models might exhibit different behavior — for better or for worse. Encoder and encoder-decoder transformers might also require a slightly different treatment. We leave this for future work.

If you do follow-up work, please cite as:

@misc{speaking_probes,

url = {https://towardsdatascience.com/speaking-probes-self-interpreting-models-7a3dc6cb33d6},

note = {\url{https://towardsdatascience.com/speaking-probes-self-interpreting-models-7a3dc6cb33d6}},

title = {Speaking Probes: Self-interpreting Models?},

publisher = {Towards Data Science},

author = {Guy, Dar},

year = 2023

}You are also welcome to follow me on Twitter:

This is not a direct follow-up, but you might be also interested in my other blog post on a related paper I worked on with collaborators:

Analyzing Transformers in Embedding Space — Explained

Appendix: Some Related Work

Interpretability of neurons in NLP is a challenging task that has been tackled with multiple different methods [Sajjad et al., 2021]. Methods can either be used to interpret neurons or find the most relevant neurons per concept. We only considered the former in this work. Perhaps most similar to ours is Corpus Generation [Poerner et al., 2018] in that they don’t need a corpus and they generate textual output. Unlike our method, they use gradient ascent for optimization and their method does not produce eloquent explanations, but rather (not necessarily grammatical) patterns that maximally activate the neuron. Many methods rely on a corpus of inputs to feed into the model: [K´ad´ar et al., 2017; Na et al., 2019] extracted from the corpus pieces of text that activated the neuron the most and used it as the interpretation of the neuron. This, of course, requires a large corpus, and the results might be biased by the corpus at hand, which is often more specific than the corpus the model was trained on.

A recent line of work has focused on the input-independent interpretation of neurons [Geva et al., 2020; Dar et al., 2022]. They take neurons and project them to the embedding space. To visualize the neuron, they take the top most activated tokens in the neuron’s representation in embedding space. This method does not require any passes at all, but it reduces a neuron to a list of tokens. Tokens are often not the right granularity for interpretation. Here’s a brief overview of the mentioned methods:

- Finding Most Activated Sentences: perhaps the most natural approach to parameter interpretation is running the model on an entire corpus and looking at the sentences and specific tokens with the highest activation in this parameter. Some variants use 5-grams [K´ad´ar et al., 2017] or parsing trees [Na et al., 2019] to try to isolate the important parts of the sentence and avoid using the entire text.

- Corpus Generation: an optimization-based approach that was proposed by Poerner et al. [2018] generates a sequence that maximizes the parameter’s activation. A series of gradient ascent steps are applied on a matrix using the softmax Gumbel trick to allow for sampling and control for the smoothness of the distribution. We omit the exact details and leave the interested reader to read the original paper (only 3 pages).

- Projection to Embedding Space: recent works [Geva et al., 2020; Dar et al., 2022] have shown that the parameters of GPT-like architectures can be interpreted by projecting them into the embedding space by multiplying them by the embedding matrix E ∈ R^d × |V|. The columns of E are the token embeddings, so the method essentially finds the token embeddings with the highest dot-product with the parameter. We used this method as a reference in our work due to its efficiency and simplicity.

References

T. B. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell, S. Agarwal, A. Herbert-Voss, G. Krueger, T. Henighan, R. Child, A. Ramesh, D. M. Ziegler, J. Wu, C. Winter, C. Hesse, M. Chen, E. Sigler, M. Litwin, S. Gray, B. Chess, J. Clark, C. Berner, S. McCandlish, A. Radford, I. Sutskever, and D. Amodei. Language models are few-shot learners, 2020. URL https://arxiv.org/abs/2005.14165.

D. Dai, L. Dong, Y. Hao, Z. Sui, B. Chang, and F. Wei. Knowledge neurons in pretrained transformers, 2021. URL https://arxiv.org/abs/2104.08696.

G. Dar, M. Geva, A. Gupta, and J. Berant. Analyzing transformers in embedding space, 2022. URL https://arxiv.org/abs/2209.02535.

J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding, 2018. URL https://arxiv.org/abs/1810.04805.

N. Elhage, N. Nanda, C. Olsson, T. Henighan, N. Joseph, B. Mann, A. Askell, Y. Bai, A. Chen, T. Conerly, N. DasSarma, D. Drain, D. Ganguli, Z. Hatfield-Dodds, D. Hernandez, A. Jones, J. Kernion, L. Lovitt, K. Ndousse, D. Amodei, T. Brown, J. Clark, J. Kaplan, S. McCandlish, and C. Olah. A mathematical framework for transformer circuits, 2021. URL https://transformer-circuits.pub/2021/framework/index.html.

M. Geva, R. Schuster, J. Berant, and O. Levy. Transformer feed-forward layers are key-value memories, 2020. URL https://arxiv.org/abs/2012.14913.

M. Geva, A. Caciularu, G. Dar, P. Roit, S. Sadde, M. Shlain, B. Tamir, and Y. Goldberg. Lm-debugger: An interactive tool for inspection and intervention in transformer-based language models. arXiv preprint arXiv:2204.12130, 2022a.

M. Geva, A. Caciularu, K. R. Wang, and Y. Goldberg. Transformer feed-forward layers build predictions by promoting concepts in the vocabulary space, 2022b. URL https://arxiv.org/abs/2203.14680.

Y. Hao, H. Song, L. Dong, S. Huang, Z. Chi, W. Wang, S. Ma, and F. Wei. Language models are general-purpose interfaces, 2022. URL https://arxiv.org/abs/2206.06336.

D. Hendrycks, C. Burns, S. Basart, A. Zou, M. Mazeika, D. Song, and J. Steinhardt. Measuring massive multitask language understanding. In International Conference on Learning Representations, 2021. URL https://openreview.net/forumid=d7KBjmI3GmQ.

A. Kadar, G. Chrupala, and A. Alishahi. Representation of Linguistic Form and Function in Recurrent Neural Networks. Computational Linguistics, 43(4):761–780, 12 2017. ISSN 0891–2017. doi: 10.1162/COLI a 00300. URL https://doi.org/10.1162/COLI_a_00300.

B. Lester, R. Al-Rfou, and N. Constant. The power of scale for parameter-efficient prompt tuning, 2021. URL https://arxiv.org/abs/2104.08691.

J. Liu, D. Shen, Y. Zhang, B. Dolan, L. Carin, and W. Chen. What makes good in-context examples for gpt-3? CoRR, abs/2101.06804, 2021. URL https://arxiv.org/abs/2101.06804.

Y. Liu, M. Ott, N. Goyal, J. Du, M. Joshi, D. Chen, O. Levy, M. Lewis, L. Zettlemoyer, and V. Stoyanov. Roberta: A robustly optimized bert pretraining approach, 2019. URL https://arxiv.org/abs/1907.11692.

S. Na, Y. J. Choe, D.-H. Lee, and G. Kim. Discovery of natural language concepts in individual units of cnns, 2019. URL https://arxiv.org/abs/1902.07249.

nostalgebraist. interpreting gpt: the logit lens, 2020. URL https://www.lesswrong.com/posts/AcKRB8wDpdaN6v6ru/interpreting-gpt-the-logit-lens.

N. Poerner, B. Roth, and H. Schutze. Interpretable textual neuron representations for NLP. In Proceedings of the 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, pages 325–327, Brussels, Belgium, Nov. 2018. Association for Computational Linguistics. doi: 10.18653/v1/W18–5437. URL https://aclanthology.org/W18–5437.

A. Radford, J. Wu, R. Child, D. Luan, D. Amodei, and I. Sutskever. Language models are unsupervised multitask learners. In OpenAI blog, 2019.

H. Sajjad, N. Durrani, and F. Dalvi. Neuron-level interpretation of deep nlp models: A survey. 2021. doi: 10.48550/ARXIV.2108.13138. URL https://arxiv.org/abs/2108.13138.

J. Wei, X. Wang, D. Schuurmans, M. Bosma, B. Ichter, F. Xia, E. Chi, Q. Le, and D. Zhou. Chain of thought prompting elicits reasoning in large language models, 2022. URL https://arxiv.org/abs/2201.11903.

Speaking Probes: Self-Interpreting Models? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

...

800+ IT

News

als RSS Feed abonnieren

800+ IT

News

als RSS Feed abonnieren