📚 Using GPT-3.5-Turbo and GPT-4 to Apply Text-defined Data Quality Checks on Humanitarian Datasets

💡 Newskategorie: AI Nachrichten

🔗 Quelle: towardsdatascience.com

Using GPT-3.5-Turbo and GPT-4 for Predicting Humanitarian Data Categories

TL;DR

In this article, I explore using GPT-3.5-Turbo and GPT-4 to categorize datasets without the need for labeled data or model training, by prompting the model with data excerpts and category definitions. Using a small sample of categorized ‘Data Grid’ datasets found on the amazing Humanitarian Data Exchange (HDX), zero-shot prompting of GPT-4 resulted in 96% accuracy when predicting category and 89% accuracy when predicting both category and sub-category. GPT-4 outperformed GPT-3.5-turbo for the same prompts, with 96% accuracy versus 66% for category. Especially useful was that the model could provide reasoning for its predictions which helped to identify improvements to the process. This is just a quick analysis involving a small number of records due to cost limitations, but it shows some promise for using Large Language Models for data quality checks and summarization. Limitations exist due to the maximum number of tokens allowed in prompts affecting the amount of data that can be included in data excerpts, as well as performance and cost challenges — especially if you’re a small non-profit! — at this early stage of commercial generative AI.

The Humanitarian Data Exchange (HDX) platform has a great feature called the HDX Data Grid which provides an overview of high-quality data coverage in six key crisis categories by country, see here for an example for Chad. The datasets which make it into the grid undergo a series of rigorous tests by the HDX team to determine coverage and quality, the first of which is to determine if the dataset is in an approved category.

I wondered if perhaps Large Language Models (LLMs) might be an efficient way to apply data quality and classification rules in situations where there might not be any labeled training data. It would also be convenient to provide rules in a human-readable text form that non-technical teams could easily maintain, and use these directly in order to eliminate the requirement for features engineering and model management.

Oh, and I also recently got early access to GPT-4 and wanted to take it for a bit of a spin! 🙂 … So decided to also do some analysis comparing performance with GPT-3.5-Turbo.

Is the Dataset in an Approved Category?

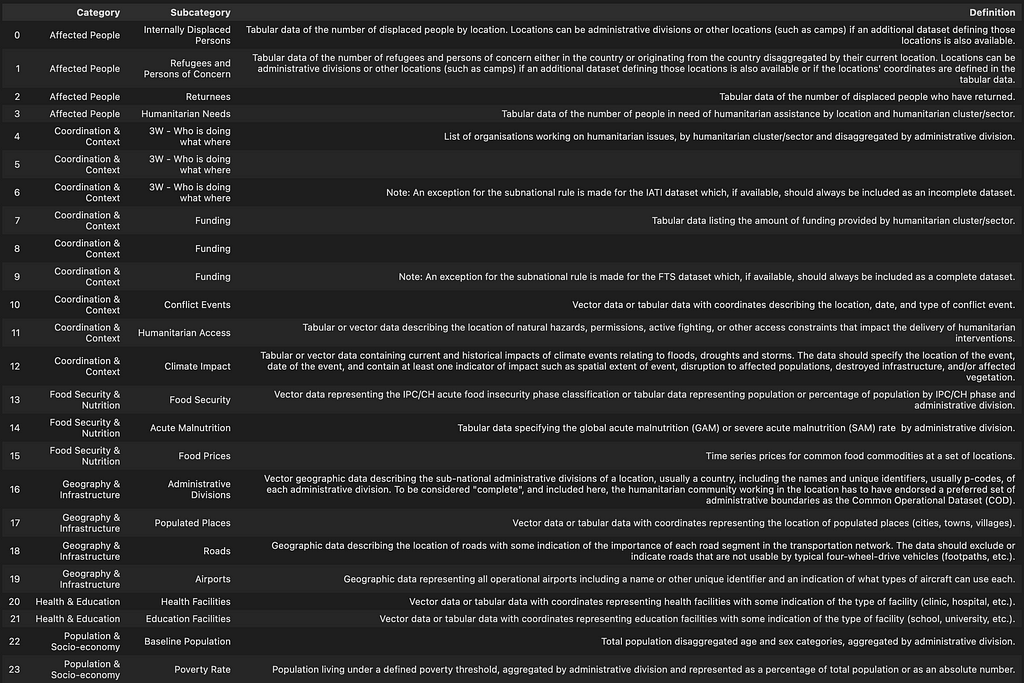

Looking at The State of Humanitarian Data 2023 Annex B, which outlines the criteria and categories used when assessing if data is of sufficient quality and coverage …

The first step in determining whether a dataset should be included in a Data Grid is to check whether the dataset meets the thematic requirement defined in Annex A. Datasets that are not considered relevant are automatically excluded.

The categories in Annex A are …

We could write a classifier to assign these categories to our datasets based on the data they contain, but we only know categories for the subset of datasets that were approved for HDX Data Grid. If prompting alone can classify our data without having to manually label it, that would be fantastic. This is a zero-shot task[2], one of the amazing properties of Large Language Models where classification can occur without training specifically for the task or providing examples.

Predicting Dataset Category for a Single Table

Let’s read the categories data and use it to generate prompt text defining each one …

hdx_data_categories_file = './data/Data Completeness Definitions - version_1.csv'

dg_categories = pd.read_csv(hdx_data_categories_file)

dg_categories = dg_categories[

["Category", "Subcategory", "Definition", "Datagrid recipe category"]

]

dg_categories["prompt_text"] = dg_categories.apply(

lambda x: f"- Category '{x['Category']} : {x['Subcategory']}' is defined as: {x['Definition']}",

axis=1,

)

category_prompt_text = dg_categories["prompt_text"].to_string(index=False, header=False)

display(category_prompt_text)

Which gives …

- Category \'Affected People : Internally Displaced Persons\' is defined as: Tabular data of the number of displaced people by location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available.\n

- Category \'Affected People : Refugees and Persons of Concern\' is defined as: Tabular data of the number of refugees and persons of concern either in the country or originating from the country disaggregated by their current location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available or if the locations\' coordinates are defined in the tabular data.\n

- Category \'Affected People : Returnees\' is defined as: Tabular data of the number of displaced people who have returned.\n

- Category \'Affected People : Humanitarian Needs\' is defined as: Tabular data of the number of people in need of humanitarian assistance by location and humanitarian cluster/sector.\n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: List of organisations working on humanitarian issues, by humanitarian cluster/sector and disaggregated by administrative division.\n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: \n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: Note: An exception for the subnational rule is made for the IATI dataset which, if available, should always be included as an incomplete dataset.\n

- Category \'Coordination & Context : Funding\' is defined as: Tabular data listing the amount of funding provided by humanitarian cluster/sector.\n

- Category \'Coordination & Context : Funding\' is defined as: \n

- Category \'Coordination & Context : Funding\' is defined as: Note: An exception for the subnational rule is made for the FTS dataset which, if available, should always be included as a complete dataset.\n

- Category \'Coordination & Context : Conflict Events\' is defined as: Vector data or tabular data with coordinates describing the location, date, and type of conflict event.\n

- Category \'Coordination & Context : Humanitarian Access\' is defined as: Tabular or vector data describing the location of natural hazards, permissions, active fighting, or other access constraints that impact the delivery of humanitarian interventions.\n

- Category \'Coordination & Context : Climate Impact\' is defined as: Tabular or vector data containing current and historical impacts of climate events relating to floods, droughts and storms. The data should specify the location of the event, date of the event, and contain at least one indicator of impact such as spatial extent of event, disruption to affected populations, destroyed infrastructure, and/or affected vegetation.\n

- Category \'Food Security & Nutrition : Food Security\' is defined as: Vector data representing the IPC/CH acute food insecurity phase classification or tabular data representing population or percentage of population by IPC/CH phase and administrative division.\n

- Category \'Food Security & Nutrition : Acute Malnutrition\' is defined as: Tabular data specifying the global acute malnutrition (GAM) or severe acute malnutrition (SAM) rate\xa0 by administrative division.\n

- Category \'Food Security & Nutrition : Food Prices\' is defined as: Time series prices for common food commodities at a set of locations.\n- Category \'Geography & Infrastructure : Administrative Divisions\' is defined as: Vector geographic data describing the sub-national administrative divisions of a location, usually a country, including the names and unique identifiers, usually p-codes, of each administrative division. To be considered "complete", and included here, the humanitarian community working in the location has to have endorsed a preferred set of administrative boundaries as the Common Operational Dataset (COD).\n

- Category \'Geography & Infrastructure : Populated Places\' is defined as: Vector data or tabular data with coordinates representing the location of populated places (cities, towns, villages).\n

- Category \'Geography & Infrastructure : Roads\' is defined as: Geographic data describing the location of roads with some indication of the importance of each road segment in the transportation network. The data should exclude or indicate roads that are not usable by typical four-wheel-drive vehicles (footpaths, etc.).\n

- Category \'Geography & Infrastructure : Airports\' is defined as: Geographic data representing all operational airports including a name or other unique identifier and an indication of what types of aircraft can use each.\n

- Category \'Health & Education : Health Facilities\' is defined as: Vector data or tabular data with coordinates representing health facilities with some indication of the type of facility (clinic, hospital, etc.).\n

- Category \'Health & Education : Education Facilities\' is defined as: Vector data or tabular data with coordinates representing education facilities with some indication of the type of facility (school, university, etc.).\n

- Category \'Population & Socio-economy : Baseline Population\' is defined as: Total population disaggregated age and sex categories, aggregated by administrative division.\n

- Category \'Population & Socio-economy : Poverty Rate\' is defined as: Population living under a defined poverty threshold, aggregated by administrative division and represented as a percentage of total population or as an absolute number.'

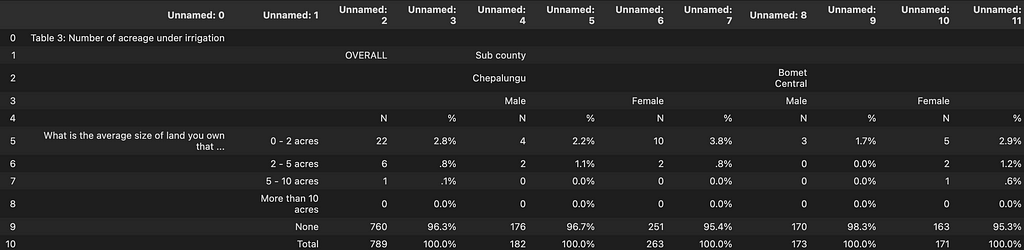

Here is a test file related to agriculture which is an unsupported category and which doesn’t appear on HDX’s Data Grid …

filename = "./data/number-of-acreage-under-irrigation.xlsx"

df = pd.read_excel(filename, sheet_name="Sheet1")

df = df.fillna("")

display(df)

In the above, I have intentionally avoided parsing the table to tidy things up (see here for more on that). Instead, we’ll throw the raw table at GPT to see how it performs.

Represented as a CSV string for the prompt, the table looks like this …

csv_as_str = df[0:20].to_csv(index=False)

print(csv_as_str)

Unnamed: 0,Unnamed: 1,Unnamed: 2,Unnamed: 3,Unnamed: 4,Unnamed: 5,Unnamed: 6,Unnamed: 7,Unnamed: 8,Unnamed: 9,Unnamed: 10,Unnamed: 11

Table 3: Number of acreage under irrigation,,,,,,,,,,,

,,OVERALL,,Sub county,,,,,,,

,,,,Chepalungu,,,,Bomet Central,,,

,,,,Male,,Female,,Male,,Female,

,,N,%,N,%,N,%,N,%,N,%

What is the average size of land you own that is currently under irrigation?,0 - 2 acres,22,2.8%,4,2.2%,10,3.8%,3,1.7%,5,2.9%

,2 - 5 acres,6,.8%,2,1.1%,2,.8%,0,0.0%,2,1.2%

,5 - 10 acres,1,.1%,0,0.0%,0,0.0%,0,0.0%,1,.6%

,More than 10 acres,0,0.0%,0,0.0%,0,0.0%,0,0.0%,0,0.0%

,None,760,96.3%,176,96.7%,251,95.4%,170,98.3%,163,95.3%

,Total,789,100.0%,182,100.0%,263,100.0%,173,100.0%,171,100.0%

For the prompt, we’ll combine category definitions into one chat prompt, and some instructions and the table being analyzed into a second …

prompts = []

prompts.append(f"Here is a list of HDX data categories with their definition: \n\n {category_prompt_text} \n\n")

prompts.append(f"Does the following table from file {filename} fall into one of the categories provided, if not say no. "\

f"If it does, which category and explain why? \n\n {csv_as_str} \n\n")

So prompt 1 …

Here is a list of HDX data categories with their definition:

- Category \'Affected People : Internally Displaced Persons\' is defined as: Tabular data of the number of displaced people by location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available.\n

- Category \'Affected People : Refugees and Persons of Concern\' is defined as: Tabular data of the number of refugees and persons of concern either in the country or originating from the country disaggregated by their current location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available or if the locations\' coordinates are defined in the tabular data.\n

- Category \'Affected People : Returnees\' is defined as: Tabular data of the number of displaced people who have returned.\n

- Category \'Affected People : Humanitarian Needs\' is defined as: Tabular data of the number of people in need of humanitarian assistance by location and humanitarian cluster/sector.\n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: List of organisations working on humanitarian issues, by humanitarian cluster/sector and disaggregated by administrative division.\n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: \n

- Category \'Coordination & Context : 3W - Who is doing what where\' is defined as: Note: An exception for the subnational rule is made for the IATI dataset which, if available, should always be included as an incomplete dataset.\n

- Category \'Coordination & Context : Funding\' is defined as: Tabular data listing the amount of funding provided by humanitarian cluster/sector.\n

- Category \'Coordination & Context : Funding\' is defined as: \n

- Category \'Coordination & Context : Funding\' is defined as: Note: An exception for the subnational rule is made for the FTS dataset which, if available, should always be included as a complete dataset.\n

- Category \'Coordination & Context : Conflict Events\' is defined as: Vector data or tabular data with coordinates describing the location, date, and type of conflict event.\n

- Category \'Coordination & Context : Humanitarian Access\' is defined as: Tabular or vector data describing the location of natural hazards, permissions, active fighting, or other access constraints that impact the delivery of humanitarian interventions.\n

- Category \'Coordination & Context : Climate Impact\' is defined as: Tabular or vector data containing current and historical impacts of climate events relating to floods, droughts and storms. The data should specify the location of the event, date of the event, and contain at least one indicator of impact such as spatial extent of event, disruption to affected populations, destroyed infrastructure, and/or affected vegetation.\n

- Category \'Food Security & Nutrition : Food Security\' is defined as: Vector data representing the IPC/CH acute food insecurity phase classification or tabular data representing population or percentage of population by IPC/CH phase and administrative division.\n

- Category \'Food Security & Nutrition : Acute Malnutrition\' is defined as: Tabular data specifying the global acute malnutrition (GAM) or severe acute malnutrition (SAM) rate\xa0 by administrative division.\n

- Category \'Food Security & Nutrition : Food Prices\' is defined as: Time series prices for common food commodities at a set of locations.\n- Category \'Geography & Infrastructure : Administrative Divisions\' is defined as: Vector geographic data describing the sub-national administrative divisions of a location, usually a country, including the names and unique identifiers, usually p-codes, of each administrative division. To be considered "complete", and included here, the humanitarian community working in the location has to have endorsed a preferred set of administrative boundaries as the Common Operational Dataset (COD).\n

- Category \'Geography & Infrastructure : Populated Places\' is defined as: Vector data or tabular data with coordinates representing the location of populated places (cities, towns, villages).\n

- Category \'Geography & Infrastructure : Roads\' is defined as: Geographic data describing the location of roads with some indication of the importance of each road segment in the transportation network. The data should exclude or indicate roads that are not usable by typical four-wheel-drive vehicles (footpaths, etc.).\n

- Category \'Geography & Infrastructure : Airports\' is defined as: Geographic data representing all operational airports including a name or other unique identifier and an indication of what types of aircraft can use each.\n

- Category \'Health & Education : Health Facilities\' is defined as: Vector data or tabular data with coordinates representing health facilities with some indication of the type of facility (clinic, hospital, etc.).\n

- Category \'Health & Education : Education Facilities\' is defined as: Vector data or tabular data with coordinates representing education facilities with some indication of the type of facility (school, university, etc.).\n

- Category \'Population & Socio-economy : Baseline Population\' is defined as: Total population disaggregated age and sex categories, aggregated by administrative division.\n

- Category \'Population & Socio-economy : Poverty Rate\' is defined as: Population living under a defined poverty threshold, aggregated by administrative division and represented as a percentage of total population or as an absolute number.'

And prompt 2 …

Does the following table from file ./data/number-of-acreage-under-irrigation.xlsx fall into one of the categories provided, if not say no. If it does, which category and explain why?

Unnamed: 0,Unnamed: 1,Unnamed: 2,Unnamed: 3,Unnamed: 4,Unnamed: 5,Unnamed: 6,Unnamed: 7,Unnamed: 8,Unnamed: 9,Unnamed: 10,Unnamed: 11\nTable 3: Number of acreage under irrigation,,,,,,,,,,,

,,OVERALL,,Sub county,,,,,,,

,,,,Chepalungu,,,,Bomet Central,,,

,,,,Male,,Female,,Male,,Female,

,,N,%,N,%,N,%,N,%,N,%

What is the average size of land you own that is currently under irrigation?,0 - 2 acres,22,2.8%,4,2.2%,10,3.8%,3,1.7%,5,2.9%

,2 - 5 acres,6,.8%,2,1.1%,2,.8%,0,0.0%,2,1.2%

,5 - 10 acres,1,.1%,0,0.0%,0,0.0%,0,0.0%,1,.6%

,More than 10 acres,0,0.0%,0,0.0%,0,0.0%,0,0.0%,0,0.0%

,None,760,96.3%,176,96.7%,251,95.4%,170,98.3%,163,95.3%

,Total,789,100.0%,182,100.0%,263,100.0%,173,100.0%,171,100.0%

Let’s try this with both GPT-3.5-turbo and GPT-4 …

def prompt_model(prompts, temperature=0.0, model="gpt-4"):

messages = [{"role": "system", "content": "You are a helpful assistant."}]

for prompt in prompts:

messages.append({"role": "user", "content": prompt})

response = ai.ChatCompletion.create(

model=model, temperature=temperature, messages=messages

)

return response["choices"][0]["message"]["content"]

prompts = []

prompts.append(

f"Here is a list of HDX data categories with their definition: \n\n {category_prompt_text} \n\n"

)

prompts.append(

f"Does the following table from file {filename} fall into one of the categories provided, if not say no. "

f"If it does, which category and explain why? \n\n {csv_as_str} \n\n"

)

for model in ["gpt-3.5-turbo", "gpt-4"]:

response = prompt_model(prompts, temperature=0.0, model=model)

print(f"\n{model} Model response: \n\n{response}")

We get …

gpt-3.5-turbo Model response:

No, the table does not fall into any of the categories provided.

The table is about the number and percentage of people who own land of

different sizes that are currently under irrigation.

It does not provide any information about the number of affected people,

coordination and context, food security and nutrition, geography and

infrastructure, health and education, or population and socio-economy.

gpt-4 Model response:

The table from the file ./data/number-of-acreage-under-irrigation.xlsx

does not fall into any of the provided HDX data categories.

The table provides information about the number of acreage under

irrigation in different sub-counties and is not related to any of the

categories mentioned, such as affected people, coordination & context,

food security & nutrition, geography & infrastructure, health & education,

or population & socio-economy.

Both GPT-3.5-turbo and GPT-4 worked perfectly and identified that our table does not fall into one of the required categories (it’s related to agriculture). I also like the reasoning, which is exactly correct at least in this one example.

Let’s try with a table that is in a supported category, Food prices for Chad as found on the Chad HDX Data Grid. The CSV string for this file, taking the top 20 rows, looks like this …

date,admin1,admin2,market,latitude,longitude,category,commodity,unit,priceflag,pricetype,currency,price,usdprice

#date,#adm1+name,#adm2+name,#loc+market+name,#geo+lat,#geo+lon,#item+type,#item+name,#item+unit,#item+price+flag,#item+price+type,#currency,#value,#value+usd

2003-10-15,Barh El Gazal,Barh El Gazel Sud,Moussoro,13.640841,16.490069,cereals and tubers,Maize,KG,actual,Retail,XAF,134.0,0.2377

2003-10-15,Barh El Gazal,Barh El Gazel Sud,Moussoro,13.640841,16.490069,cereals and tubers,Millet,KG,actual,Retail,XAF,147.0,0.2608

2003-10-15,Lac,Mamdi,Bol,13.5,14.683333,cereals and tubers,Maize,KG,actual,Retail,XAF,81.0,0.1437

2003-10-15,Lac,Mamdi,Bol,13.5,14.683333,cereals and tubers,Maize (white),KG,actual,Retail,XAF,81.0,0.1437

2003-10-15,Logone Occidental,Lac Wey,Moundou,8.5666667,16.0833333,cereals and tubers,Millet,KG,actual,Retail,XAF,95.0,0.1685

2003-10-15,Logone Occidental,Lac Wey,Moundou,8.5666667,16.0833333,cereals and tubers,Sorghum,KG,actual,Retail,XAF,62.0,0.11

2003-10-15,Logone Occidental,Lac Wey,Moundou,8.5666667,16.0833333,cereals and tubers,Sorghum (red),KG,actual,Retail,XAF,62.0,0.11

2003-10-15,Moyen Chari,Barh-K h,Sarh,9.1429,18.3923,cereals and tubers,Millet,KG,actual,Retail,XAF,100.0,0.1774

2003-10-15,Moyen Chari,Barh-K h,Sarh,9.1429,18.3923,cereals and tubers,Sorghum,KG,actual,Retail,XAF,90.0,0.1597

2003-10-15,Moyen Chari,Barh-K h,Sarh,9.1429,18.3923,cereals and tubers,Sorghum (red),KG,actual,Retail,XAF,90.0,0.1597

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Maize,KG,actual,Retail,XAF,132.0,0.2342

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Maize (white),KG,actual,Retail,XAF,132.0,0.2342

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Millet,KG,actual,Retail,XAF,110.0,0.1952

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Rice (imported),KG,actual,Retail,XAF,396.0,0.7026

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Rice (local),KG,actual,Retail,XAF,297.0,0.5269

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Sorghum,KG,actual,Retail,XAF,100.0,0.1774

2003-10-15,Ndjaména,Ndjamena,Ndjamena,12.11,15.04,cereals and tubers,Sorghum (red),KG,actual,Retail,XAF,100.0,0.1774

2003-10-15,Ouaddai,Ouara,Abeche,13.8166667,20.8166667,cereals and tubers,Millet,KG,actual,Retail,XAF,155.0,0.275

2003-10-15,Ouaddai,Ouara,Abeche,13.8166667,20.8166667,cereals and tubers,Sorghum,KG,actual,Retail,XAF,97.0,0.1721

Prompting with the same format we get …

gpt-3.5-turbo Model response:

Yes, the table falls into the category 'Food Security & Nutrition : Food Prices'.

This is because the table contains time series prices for common food

commodities at different locations.

gpt-4 Model response:

Yes, the table falls into the category "Food Security & Nutrition : Food Prices".

This is because the table contains time series prices for common

food commodities (such as Maize, Millet, and Sorghum) at various

locations (markets) with their respective coordinates (latitude and longitude).

The data also includes information on the date, administrative divisions,

and currency.

So again, both models were correct. The category for this dataset is indeed ‘Food Security & Nutrition : Food Prices’.

OK, looking good for some quick examples using a single table. What about identifying the category based on the contents of multiple tables?

Predicting a Dataset Category Using Excerpts from Multiple Tables

In HDX a dataset can have multiple ‘Resources’ (files), and for data in Excel, these files can have multiple tables in sheets. So just looking at just one table from the dataset might not tell the whole story, we need to make a decision based on multiple tables. This is important because among all the tables in a dataset there might be tabs for documentation about the dataset, field lookups, and more, which by themselves wouldn’t be enough to deduce the category of all data in the dataset.

Before ChatGPT API was launched, this would have been difficult due to token limits. However, ChatGPT allows us to specify multiple prompts as well as having increased token limits. As we’ll see, still a limiting factor, but an improvement on previous models.

The sample data for this analysis — provided in the notebook repo — was extracted from HDX by …

- Looping over datasets

- For each dataset loop over files

- For each tabular file, download it

- For each tab in the file create an excerpt of the table (first 20 rows) in CSV format

Note: I haven’t included this code to avoid too much traffic on HDX, but if interested in this code, message me here on Medium.

So each dataset has a field like this …

[

{

"filename":"<DATASET NAME>/<FILE NAME 1>",

"format": "EXCEL",

"sheet": "<SHEET 1>",

"table_excerpt": "<FIRST 20 ROWS OF TABLE IN CSV FORMAT>"

},

{

"filename":"<DATASET NAME>/<FILE NAME 1>",

"format": "EXCEL",

"sheet": "<SHEET 2>",

"table_excerpt": "<FIRST 20 ROWS OF TABLE IN CSV FORMAT>"

},

{

"filename":"<DATASET NAME>/<FILE NAME 2>",

"format": "CSV",

"sheet": "",

"table_excerpt": "<FIRST 20 ROWS OF TABLE IN CSV FORMAT>"

},

{

"filename":"<DATASET NAME>/<FILE NAME 3>",

"format": "EXCEL",

"sheet": "<SHEET 1>",

"table_excerpt": "<FIRST 20 ROWS OF TABLE IN CSV FORMAT>"

},

... etc

]

Having this structure for each dataset allows us to generate multiple prompts for each table …

def predict(data_excerpts, temperature=0.0):

results = []

for index, row in data_excerpts.iterrows():

dataset = row["name"]

title = row["title"]

print(

f"\n========================================= {dataset} =============================================\n"

)

prompts = []

# Start the prompt by defining the categories we want to assign

prompts.append(

f"Here is a list of HDX data categories with their definition: \n\n {category_prompt_text} \n\n"

)

prompts.append(

f"Here are excerpts from all the tables in this dataset: {title} ...\n\n"

)

# Build multiple prompts for each table excerpt for this dataset

tables = row["table_excerpts"]

for table in tables:

table = json.loads(table)

csv_as_str = table["table_excerpt"]

sheet = table["sheet"]

type = table["type"]

filename = table["filename"]

print(f"DATA > {filename} / {sheet}")

prompts.append(

f"Type {type} sheet {sheet} from file {filename} Table excerpt: \n\n {csv_as_str} \n\n"

)

# Finish up with our request

prompts.append(

"Does the dataset fall into exactly one of the categories mentioned above, if not say no. "

"If it does, add a pipe charatcter '|' before and after the top category and sub-category category and explain why it was chosen step-by-step.\n\n"

"What is the second most likely category if you had to pick one (adding a ^ character either side)? \n\n"

)

actual_category = row["datagrid_category"]

d = {

"dataset_name": dataset,

"filename": filename,

"prompts": prompts,

"actual_category": actual_category,

}

# Send our prompt array to two models

for model in ["gpt-3.5-turbo", "gpt-4"]:

# for model in ['gpt-3.5-turbo']:

# GPT-4 is in test and can fail sometimes

try:

print(f"\nCalling model {model}")

response = prompt_model(prompts, temperature=temperature, model=model)

if "|" in response:

predicted_category = response.split("|")[1].strip()

else:

predicted_category = response

print(f"\n{model} Model response: \n\n{response}")

match = actual_category == predicted_category

d[f"{model}_response"] = response

d[f"{model}_predicted_category"] = predicted_category

d[f"{model}_match"] = match

print(

f"******* RESULT: || {match} || prediced {predicted_category}, actual {actual_category} *******"

)

except Exception as e:

print(e)

results.append(d)

results = pd.DataFrame(results)

return results

Which generates prompts like this …

Prompt 1 — Defining the categories

Here is a list of HDX data categories with their definition:

- Category \'Affected People : Internally Displaced Persons\' is defined as: Tabular data of the number of displaced people by location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available.\n

- Category \'Affected People : Refugees and Persons of Concern\' is defined as: Tabular data of the number of refugees and persons of concern either in the country or originating from the country disaggregated by their current location. Locations can be administrative divisions or other locations (such as camps) if an additional dataset defining those locations is also available or if the locations\' coordinates are defined in the tabular data.\n

- Category \'Affected People : Returnees\' is defined as: Tabular data of the number of displaced people who have returned.\n

... etc

Prompt 2 — Specifying Dataset name and introducing table excerpts

Here are excerpts from all the tables in this colombia-health-facilities-2021: ...

Prompt 3, 4, etc —Providing Table Excerpts

Type XLSX sheet servicios from file ./data/prompts/colombia-health-facilities-2021/fservicios.xlsx Table excerpt: \

,0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20

0,depa_nombre,muni_nombre,habi_codigo_habilitacion,codigo_habilitacion,numero_sede,sede_nombre,nivel,grse_codigo,grse_nombre,serv_codigo,serv_nombre,ambulatorio,hospitalario,unidad_movil,domiciliario,otras_extramural,centro_referencia,institucion_remisora,complejidad_baja,complejidad_media,complejidad_alta

1,Amazonas,LETICIA,9100100019,9100100019,01,E.S.E. HOSPITAL SAN RAFAEL,2,7,Apoyo Diagnóstico y Complementación Terapéutica,706,LABORATORIO CLÍNICO,SI,SI,NO,NO,NO,NO,NO,NO,SI,NO

2,Amazonas,LETICIA,9100100019,9100100019,01,E.S.E. HOSPITAL SAN RAFAEL,2,7,Apoyo Diagnóstico y Complementación Terapéutica,712,TOMA DE MUESTRAS DE LABORATORIO CLÍNICO,SI,SI,SI,NO,NO,NO,NO,SI,NO,NO\n3,Amazonas,LETICIA,9100100019,9100100019,01,E.S.E. HOSPITAL SAN RAFAEL,2,7,Apoyo Diagnóstico y Complementación Terapéutica,714,SERVICIO FARMACÉUTICO,SI,SI,NO,NO,NO,NO,NO,SI,NO,NO

... etc

Type XLSX sheet capacidad from file ./data/prompts/colombia-health-facilities-2021/fcapacidad.xlsx Table excerpt:

,0,1,2,3,4,5,6,7,8,9,10\n0,depa_nombre,muni_nombre,codigo_habilitacion,numero_sede,sede_nombre,nivel,grupo_capacidad,coca_nombre,cantidad,modalidad,modelo

1,Amazonas,EL ENCANTO,9126300019,11,CENTRO DE SALUD SAN RAFAEL - E.S.E HOSPITAL SAN RAFAEL DE LETICIA,2,CAMAS,Pediátrica,1,,0

2,Amazonas,EL ENCANTO,9126300019,11,CENTRO DE SALUD SAN RAFAEL - E.S.E HOSPITAL SAN RAFAEL DE LETICIA,2,CAMAS,Adultos,3,,0

... etc

Final Prompt — Our request to classify the data

Does the dataset fall into exactly one of the categories mentioned above, if not say no.

If it does, add a pipe charatcter '|' before and after the top category and sub-category category and explain why it was chosen step-by-step.

What is the second most likely category if you had to pick one (adding a ^ character either side)?

You’ll notice in the last prompt that we’ve been a bit demanding:

- We request that the model indicates if the data doesn’t align with our categories so we catch negative cases and the model doesn’t try and assign a category to every dataset. Some will fall outside of the approved categories

- Request that category ‘exactly matches’. Without this GPT-3.5-Turbo would merrily construct new ones!

- If the model does identify a category, wrap it in ‘|’ for easier parsing

- We ask the model to provide its reasoning as this has been shown to improve results [3]. It’s also useful to see why the category decision was made to highlight cases of hallucination

- Finally, for our discussion later, we request the second most likely category as well

Also, if you look closely at the code in the predict function, I have used a temperature of 0.0 for this study. Temperature controls how random the output is, and since we want things to be nice and specific rather than text describing quantum physics in the voice of cookie monster, I set it as low as possible.

Making our predictions …

output_folder = "./data/prompts"

data_excerpts = pd.read_pickle(f"{output_folder}/datasets_excerpts.pkl")

data_excerpts = data_excerpts[data_excerpts["is_datagrid"] == True]

data_excerpts = data_excerpts.sample(min(150, data_excerpts.shape[0]), random_state=42)

results = predict(data_excerpts, temperature=0.0)

results.to_excel(f"{output_folder}/results.xlsx")

How did we do?

def output_prediction_metrics(results, prediction_field="predicted_post_processed", actual_field="actual_category"):

"""

Prints out model performance report if provided results.

Parameters

----------

results : list

Where each element has fields as defined by ...

prediction_field : str

Field name of element with prediction

actual_field : str

Field name of element with actual value

"""

y_test = []

y_pred = []

for index, r in results.iterrows():

if actual_field not in r:

print("Provided results do not contain expected values.")

sys.exit()

y_pred.append(r[prediction_field])

y_test.append(r[actual_field])

print(f"Results for {prediction_field}, {len(results)} predictions ...\n")

print(f"Accuracy: {round(accuracy_score(y_test, y_pred),2)}")

print(

f"Precision: {round(precision_score(y_test, y_pred, average='weighted', zero_division=0),2)}"

)

print(

f"Recall: {round(recall_score(y_test, y_pred, average='weighted', zero_division=0),2)}"

)

print(

f"F1: {round(f1_score(y_test, y_pred, average='weighted', zero_division=0),2)}"

)

return

results.fillna("", inplace=True)

print("\ngpt-3.5-turbo ...")

output_prediction_metrics(results, prediction_field="gpt-3.5-turbo_predicted_category", actual_field="actual_category")

print("\ngpt-4 ...")

output_prediction_metrics(results, prediction_field="gpt-4_predicted_category", actual_field="actual_category")

Note: Though we passed in 150 datasets to predict, the API timed out quite a bit for GPT-4 and calls weren’t retried to save costs. This is entirely to be expected for GPT-4 which is in early preview. Some prompts also exceeded the token length for GPT-3.5-Turbo. The following results therefore apply to 53 predictions made by both GPT-3.5-turbo and GPT-4.

For predicting the category only, for example, “Coordination & Context” when the full category and sub-category is “Coordination & Context : Humanitarian Access” …

Results for gpt-3.5-turbo_predicted_category_1, 53 predictions ...

Accuracy: 0.66

Precision: 0.86

Recall: 0.66

F1: 0.68

Results for gpt-4_predicted_category_1, 53 predictions ...

Accuracy: 0.96

Precision: 0.97

Recall: 0.96

F1: 0.96

GPT-4 was nearly always able to identify the correct category (96% accuracy), performing significantly better than GPT-3.5-turbo for the same prompts (66% accuracy).

For predicting the whole category and sub-category together …

Results for gpt-3.5-turbo_predicted_category, 53 predictions ...

Accuracy: 0.57

Precision: 0.73

Recall: 0.57

F1: 0.60

Results for gpt-4_predicted_category, 53 predictions ...

Accuracy: 0.89

Precision: 0.92

Recall: 0.89

F1: 0.89

Again GPT-4 outperformed GPT-3.5 by a significant margin. 89% accuracy is actually pretty good given ….

- We only provided a set of text rules and didn’t label data, train a classifier or provide any examples.

In fact, if we look at the examples where it failed the predictions …

df = results.loc[results["gpt-4_match"] == False]

for index, row in df.iterrows():

response = row["gpt-4_response"]

predicted_second_category = response.split("^")[1].strip()

print(f"Dataset: {row['dataset_name']}")

# print(f"Dataset: {row['filename']}")

print("")

print(f"Actual: {row['actual_category']}")

print(f"Predicted category: {row['gpt-4_predicted_category']}")

print(f"Predicted second category: {predicted_second_category}\n")

print(

f"Secondary category matched: {predicted_second_category == row['actual_category']}"

)

print("=====================================================")

We get …

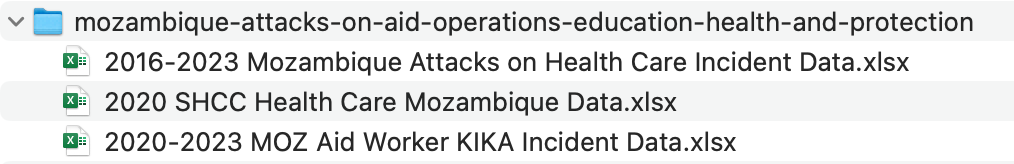

Dataset: mozambique-attacks-on-aid-operations-education-health-and-protection

Actual: Coordination & Context : Humanitarian Access

Predicted category: Coordination & Context : Conflict Events

Predicted second category: Health & Education : Health Facilities

Secondary category matched: False

=====================================================

Dataset: iraq-violence-against-civilians-and-vital-civilian-facilities

Actual: Coordination & Context : Humanitarian Access

Predicted category: Coordination & Context : Conflict Events

Predicted second category: Affected People : Humanitarian Needs

Secondary category matched: False

=====================================================

Dataset: south-sudan-access-incidents

Actual: Coordination & Context : Conflict Events

Predicted category: Coordination & Context : Humanitarian Access

Predicted second category: Coordination & Context : Conflict Events

Secondary category matched: True

=====================================================

Dataset: somalia-displacement-idps-returnees-baseline-assessment-iom-dtm

Actual: Affected People : Returnees

Predicted category: Affected People : Internally Displaced Persons

Predicted second category: Affected People : Returnees

Secondary category matched: True

=====================================================

Dataset: ukraine-border-crossings

Actual: Coordination & Context : Humanitarian Access

Predicted category: Geography & Infrastructure : Populated Places

Predicted second category: Coordination & Context : Humanitarian Access

Secondary category matched: True

=====================================================

Dataset: northeast-nigeria-displacement-for-borno-adamawa-and-yobe-states-bay-state

Actual: Affected People : Returnees

Predicted category: Affected People : Internally Displaced Persons

Predicted second category: Affected People : Returnees

Secondary category matched: True

=====================================================

Dataset: somalia-acute-malnutrition-burden-and-prevalence

Actual: Food Security & Nutrition : Acute Malnutrition

Predicted category: Affected People : Acute Malnutrition

Predicted second category: Food Security & Nutrition : Food Security

Secondary category matched: False

=====================================================

Dataset: colombia-people-in-need-pin-del-cluster-en-seguridad-alimentaria-y-nutricion-san-sp

Actual: Food Security & Nutrition : Food Security

Predicted category: Affected People : Humanitarian Needs

Predicted second category: Coordination & Context : 3W - Who is doing what where

Secondary category matched: False

=====================================================

Dataset: sind-safeguarding-healthcare-monthly-news-briefs-dataset

Actual: Coordination & Context : Humanitarian Access

Predicted category: Coordination & Context : Conflict Events

Predicted second category: Affected People : Humanitarian Needs

Secondary category matched: False

=====================================================

A couple of things jump out. Datasets like ‘mozambique-attacks-on-aid-operations-education-health-and-protection’, have a mix of data files related to both healthcare and attacks.

So assuming one category per dataset might not be the best way to frame the problem, datasets are reused across categories.

In about half the cases where GPT-4 was incorrect, the second-place category it predicted was correct. Looking at the model output for one of these cases, Ukranian border crossings …

Yes, the dataset falls into exactly one of the categories mentioned above.

|Geography & Infrastructure : Populated Places|

The dataset contains information about border crossings in Ukraine,

including their names in English and Ukrainian, the country they connect to,

and their latitude and longitude coordinates. This information is related to

populated places (border crossings) and their geographic locations, which is why the "Geography & Infrastructure : Populated Places" category is the most appropriate.

^Coordination & Context : Humanitarian Access^

The second most likely category would be "Coordination & Context : Humanitarian Access"

border crossings can be considered as points of access between countries, and

understanding their locations could be relevant in the context of humanitarian

interventions. However, this category is not as fitting as the first one

since the dataset does not specifically describe access constraints or

permissions related to humanitarian interventions.

What is pretty cool is its explanation of why it didn’t choose ‘Coordination & Context : Humanitarian Access’, because ‘…it does not specifically focus on access constraints’. Here is the category definition …

Coordination & Context : Humanitarian Access: Tabular or vector data describing the location of natural hazards, permissions, active fighting, or other access constraints that impact the delivery of humanitarian interventions.

So GPT-4 seems to be following the category rule to the word. There is some more nuance to the classification that the HDX team applies where border-crossing datasets are very reasonably related to humanitarian access. So perhaps one way to improve model prediction in this particular case would be to add additional text to the category definition indicating border crossing can relate to humanitarian access.

The takeaway here is that GPT-4 performed amazingly well and that the few incorrect predictions were due to how the problem was framed poorly by me (datasets can have multiple categories) and perhaps text used to define categories.

Conclusion

The technique seems to be quite promising. We were able to achieve some good results without any requirement to set labels, train models, or provide examples in prompts. Additionally, the data summarization capabilities of models like GPT-4 are really impressive, helping with debugging model predictions, and might also be a nice way to provide quick data overviews.

However, there are some caveats:

- The amount of data used for this study was very limited due to cost and the fact GPT-4 is only in an early preview. Future studies would need to use more data of course.

- Prompt length is currently a limiting factor, the study above only included datasets with less than 4 tables to avoid breaching token limits when prompting with table excerpts. HDX datasets can have more tables than this and having larger table excerpts might have been desirable in some cases. Vendors such as OpenAI seem to be progressively increasing token limits, so over time perhaps this becomes less of an issue.

- Likely related to being an early preview, GPT-4 model performance was very slow, taking 20 seconds per prompt to complete.

- The framing of the problem was not ideal, for example, assuming a dataset can only have one category. It sufficed to illustrate the potential of Large Language Models for assessing data quality and summarization, but a slightly different approach in the future might yield better results. For example, predicting top dataset candidates for a given category per country for datasets on the HDX platform.

Being able to specify data tests and questions about data in natural language is still pretty cool though!

References

[1] OCHA, State of Open Humanitarian Data 2023

[2] Brown et al, Language Models are Few Shot Learners (2020).

[3] Kojima et al, Large Language Models are Zero-shot reasoners.

Schopf et al, Evaluating Unsupervised Text Classification: Zero-Shot and similarity-based approaches

Code for this analysis can be found in this notebook.

Using GPT-3.5-Turbo and GPT-4 to Apply Text-defined Data Quality Checks on Humanitarian Datasets was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

...

800+ IT

News

als RSS Feed abonnieren

800+ IT

News

als RSS Feed abonnieren