📚 Why You Should Use Caching - Improve User Experience and Reduce Costs

🔗 Source: dev.to

Today, we're diving into the world of caching. Caching is a secret weapon for building scalable, high-performance systems. There are many types of caching, but in this article we'll focus on backend object caching (backend caching). Mastering it will help you build high-performance, reliable software.

In this article, we'll be exploring:

- What is Caching? We'll explore caching and explain how it temporarily stores data for faster access.

- Benefits of Caching: Discover how caching boosts speed, reduces server load, improves user experience, and can even cut costs.

- Caching Pattern: In this section, we'll dive into different ways to use the cache. Remember, there are pros and cons to each approach, so make sure to pick the right pattern for your needs!

- Caching Best Practice: Now you know how to store and retrieve cached data. But how do you ensure your cached data stays up-to-date? And what happens when the cache reaches its capacity?

- When Not To Cache: While caching offers many benefits, there are times when it's best avoided. Implementing caching in the wrong system can increase complexity and potentially even slow down performance.

What is Caching

Creating a high-performance and scalable application is all about removing bottlenecks and making the system more efficient. Databases often bottleneck system performance due to their storage and processing requirements. This makes them a costly component because they need to be scaled up often.

Thankfully, there's a component that can offload work from the database while speeding up data retrieval: the cache.

A cache is temporary storage designed for fast reads and writes. It uses low-latency memory and optimized data structures for quick operations. Chances are you've already used Redis or Memcached, or at least heard their names. These are two of the most popular distributed caching systems for backend services. Redis can even act as a primary database, but that's a topic for another article!

Benefits of Caching

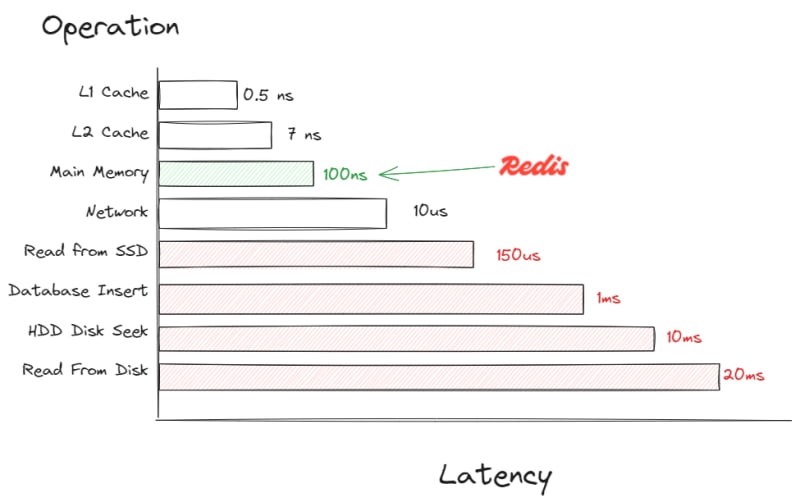

Latencies every developer should know

The main benefit of caching is speed. Reading data from a cache is significantly faster than retrieving it from a database (such as a SQL database or MongoDB). This speed comes from two things: caches use dictionary (hash map) data structures for fast lookups, and they keep data in memory instead of on disk.

Secondly, caching reduces the load on your database. This allows applications to get the data they need from the cache instead of constantly hitting the database. This dramatically decreases hardware resource usage; instead of searching for data on disk, your system simply accesses it from fast memory.
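To make the reduced database load concrete, here is a minimal sketch. `SlowDatabase` and `CachedReader` are hypothetical stand-ins invented for this example, not part of any real library; the "database" is just a dictionary that counts how often it is queried.

```python
class SlowDatabase:
    """Stand-in for a real database; counts how often it is queried."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.query_count = 0

    def fetch(self, key):
        self.query_count += 1
        return self._rows.get(key)


class CachedReader:
    """Serves reads from an in-memory dict, falling back to the database."""

    def __init__(self, database):
        self._database = database
        self._cache = {}

    def get(self, key):
        if key in self._cache:
            # Cache hit: the database is not touched.
            return self._cache[key]
        # Cache miss: query the database once and remember the result.
        value = self._database.fetch(key)
        self._cache[key] = value
        return value


db = SlowDatabase({"user:1": "Ada"})
reader = CachedReader(db)
results = [reader.get("user:1") for _ in range(1000)]
print(db.query_count)  # 1000 reads, but the database was queried only once
```

In this toy setup only the first read reaches the database; the other 999 are answered from memory.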

These benefits directly improve user experience and can lead to cost savings. Your application responds much faster, creating a smoother and more satisfying experience for users.

Caching can also reduce infrastructure costs. While a distributed cache like Redis requires its own resources, the overall savings are often significant. Your application accesses data more efficiently, potentially allowing you to downscale your database. However, this comes with a trade-off: if the cache fails, all traffic falls back to the database, so make sure the database can handle that increased load.

Cache Patterns

Now that you understand the power of caching, let's dive into the best ways to use it! In this section, we'll explore two essential categories of patterns: Cache Writing Patterns and Cache Miss Patterns. These patterns provide strategies to manage cache updates and handle situations when the data you need isn't yet in the cache.

Writing Patterns

Writing patterns dictate how your application interacts with both the cache and your database. Let's look at three common strategies: Write-back, Write-through, and Write-around. Each offers unique advantages and trade-offs:

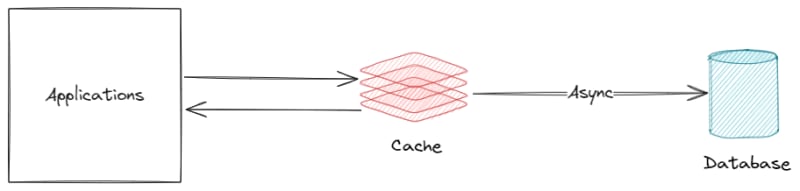

Write Back

How it works:

- Your application interacts only with the cache.

- The cache confirms the write instantly.

- A background process then copies the newly written data to the database.

Ideal for: Write-heavy applications where speed is critical, and some inconsistency is acceptable for the sake of performance. Examples include metrics and analytics applications.

Advantages:

- Faster reads: Written data is already in the cache, so reads of it can be served without touching the database.

- Faster writes: Your application doesn't wait for database writes, resulting in faster response times.

- Less database strain: Batched writes reduce database load and can potentially extend the lifespan of your database hardware.

Disadvantages:

- Risk of data loss: If the cache fails before data is saved to the database, information can be lost. Redis can mitigate this risk with its persistence options (RDB snapshots or an append-only file), but this adds complexity.

- Increased complexity: You'll need a background process or middleware to keep the cache and database eventually in sync.

- Potential for high cache usage: All writes go to the cache first, even if the data isn't frequently read. This can lead to high storage consumption.
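The write-back flow described above can be sketched roughly as follows. This is an illustration under simplifying assumptions, not a production design: `WriteBackCache` is a hypothetical class, the "database" is a plain dictionary, and `flush()` is called by hand where a real system would run it from a background worker or timer.

```python
class WriteBackCache:
    """Accepts writes in memory and copies them to the database later."""

    def __init__(self, database):
        self._database = database  # assumption: any dict-like store
        self._cache = {}
        self._dirty = set()  # keys written since the last flush

    def write(self, key, value):
        # The write is confirmed as soon as the cache holds it.
        self._cache[key] = value
        self._dirty.add(key)

    def read(self, key):
        return self._cache.get(key)

    def flush(self):
        """Copy all dirty entries to the database in one batch."""
        batch = {key: self._cache[key] for key in self._dirty}
        self._database.update(batch)
        self._dirty.clear()
        return len(batch)


database = {}
cache = WriteBackCache(database)
cache.write("page_views", 1)
cache.write("page_views", 2)  # overwrites the pending value
before_flush = dict(database)  # still empty: nothing persisted yet
flushed = cache.flush()  # one batched write instead of two
```

Anything still in `_dirty` when the process crashes is lost, which is the data-loss risk listed above.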

Write Through

How it works:

- Your application writes to both the cache and the database simultaneously.

- To reduce wait time, you can write to the cache asynchronously. This allows your application to signal successful writes before the cache operation is completely finished.

Advantages:

- Faster reads: Like Write-Back, written data is already in the cache, so reads of it rarely need the database.

- Reliability: Your application only confirms a write after it's saved in the database, guaranteeing data persistence even if a crash occurs immediately afterward.

Disadvantages:

- Slower writes: Compared to Write-Back, this policy has some overhead because the application waits for both the database and the cache to finish writing. Writing to the cache asynchronously helps, but the application still has to wait for the database.

- High cache usage: All writes go to the cache, potentially consuming storage even if the data isn't frequently accessed.
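A minimal write-through sketch, assuming a dictionary as the database and a hypothetical `WriteThroughCache` class. For simplicity it writes to the database and the cache one after the other, synchronously; the asynchronous cache write mentioned above is left out.

```python
class WriteThroughCache:
    """Writes go to the database and the cache before being confirmed."""

    def __init__(self, database):
        self._database = database  # assumption: any dict-like store
        self._cache = {}

    def write(self, key, value):
        # Persist first, so a confirmed write is always in the database.
        self._database[key] = value
        self._cache[key] = value

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        # Fall back to the database for data written before the cache existed.
        return self._database.get(key)


database = {}
store = WriteThroughCache(database)
store.write("user:1", "Ada")
```

After `write` returns, both `database` and the cache hold the value, so a crash of the cache alone does not lose data.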

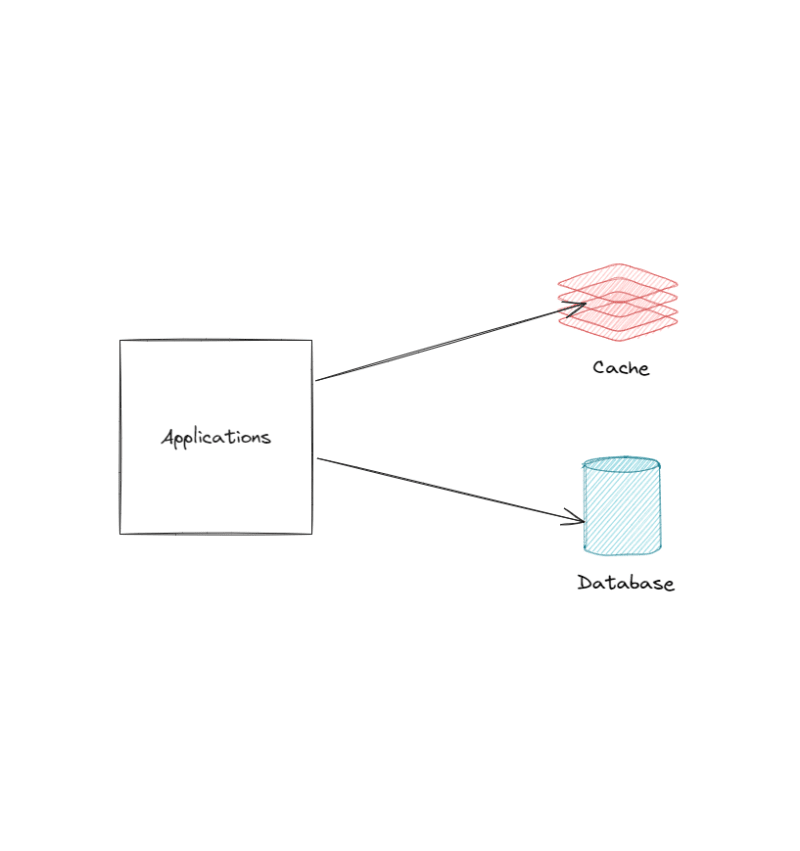

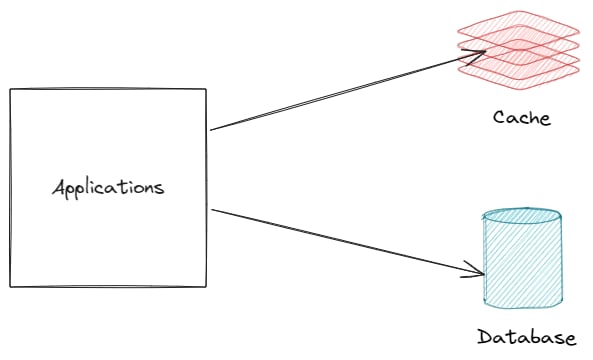

Write Around

With Write-Around, your application writes data directly to the database, bypassing the cache during the write process. To populate the cache, it employs a strategy called the cache-aside pattern:

- Read request arrives: The application checks the cache.

- Cache miss: If the data isn't found in the cache, the application fetches it from the database and then stores it in the cache for future use.

Advantages:

- Reliable writes: Data is written directly to the database, ensuring consistency.

- Efficient cache usage: Only frequently accessed data is cached, reducing memory consumption.

Disadvantages:

- Higher read latency (in some cases): If data isn't in the cache, the application must fetch it from the database, adding a round trip compared to policies where the cache is always pre-populated.
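Here is a rough sketch of write-around combined with cache-aside reads. `WriteAroundStore` is a hypothetical name and the database is a plain dictionary. `read` also returns whether the lookup was a hit or a miss, purely to make the behavior visible. The sketch does not remove an existing cache entry when a key is rewritten; the Cache Invalidation section covers that.

```python
class WriteAroundStore:
    """Writes bypass the cache; reads populate it on a miss."""

    def __init__(self, database):
        self._database = database  # assumption: any dict-like store
        self._cache = {}

    def write(self, key, value):
        # Only the database is written; the cache is not touched.
        self._database[key] = value

    def read(self, key):
        if key in self._cache:
            return self._cache[key], "hit"
        # Cache miss: fetch from the database, then store for next time.
        value = self._database.get(key)
        if value is not None:
            self._cache[key] = value
        return value, "miss"


store = WriteAroundStore({})
store.write("user:1", "Ada")
first = store.read("user:1")   # ("Ada", "miss"): extra round trip
second = store.read("user:1")  # ("Ada", "hit"): served from the cache
```

Only keys that are actually read end up in the cache, which is where the efficient cache usage comes from.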

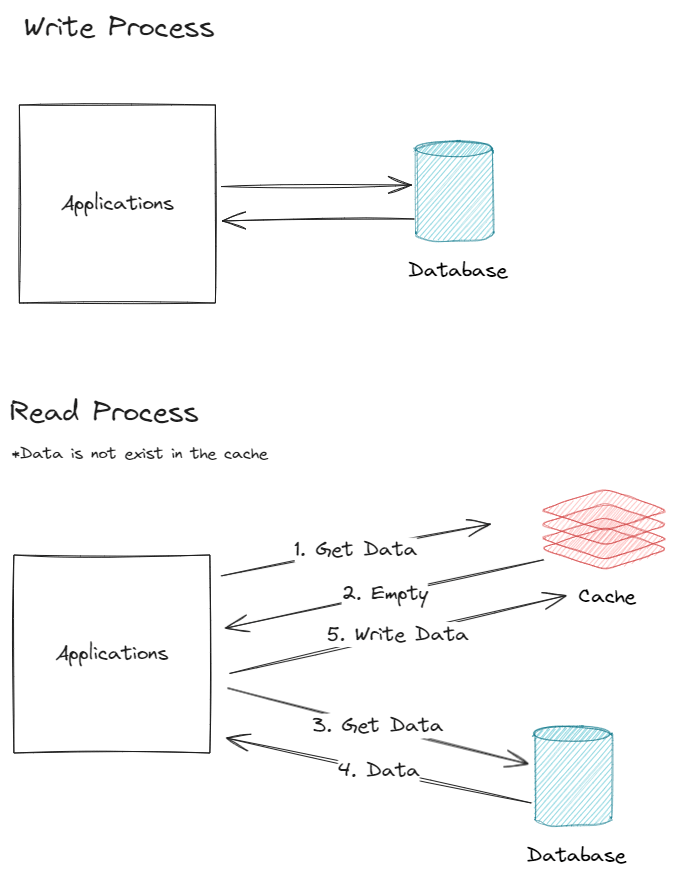

Cache Miss Pattern

A cache miss occurs when the data your application needs isn't found in the cache. Here are two common strategies to tackle this:

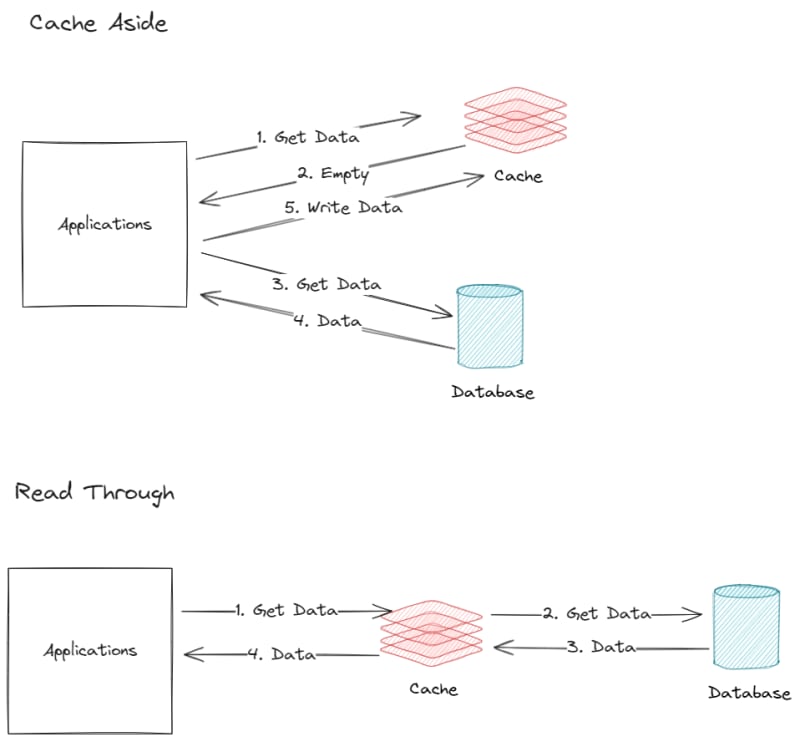

Cache-Aside

- The application checks the cache.

- On a miss, it fetches data from the database and then updates the cache.

- Key point: The application is responsible for managing the cache.

Using the Cache-Aside pattern means your application manages the cache. This is the most common approach because it's simple and doesn't require development anywhere other than the application.

Read-Through

- The application makes a request, unaware of the cache.

- A specialized mechanism checks the cache and fetches data from the database if needed.

- The cache is updated transparently.

The Read-Through pattern reduces application complexity, but it increases infrastructure complexity. It offloads the cache-management work from the application to the middleware.
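To contrast with cache-aside, here is a small read-through sketch. The `ReadThroughCache` class plays the role of the "specialized mechanism"; it and the `load_from_database` loader are hypothetical names for this example. The application function `get_user_name` only talks to the cache and never touches the database itself.

```python
from typing import Callable, Dict, List, Optional


class ReadThroughCache:
    """Cache layer that loads missing entries itself via a loader function."""

    def __init__(self, loader: Callable[[str], Optional[str]]):
        self._loader = loader
        self._entries: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._entries:
            # The cache layer, not the application, goes to the database.
            value = self._loader(key)
            if value is None:
                return None
            self._entries[key] = value
        return self._entries[key]


DATABASE = {"user:1": "Ada"}
load_calls: List[str] = []  # records every database lookup


def load_from_database(key: str) -> Optional[str]:
    load_calls.append(key)
    return DATABASE.get(key)


cache = ReadThroughCache(load_from_database)


def get_user_name(user_id: int) -> Optional[str]:
    # Application code: no cache-miss handling here.
    return cache.get(f"user:{user_id}")
```

In a real deployment this layer would typically live in a library or a separate service, which is where the added infrastructure complexity comes from.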

Overall, the write-around pattern with cache-aside is the most commonly used because it's easy to implement. However, I also recommend using the write-through pattern for any data that will be read immediately after it's written. This gives a slight boost to read performance.

Caching Best Practice

In this section, we'll explore best practices for using a cache. Following these practices will ensure your cache maintains fresh data and manages its storage effectively.

Cache Invalidation

Imagine you've stored data in the cache, and then the database is updated. This causes the data in the cache to differ from the database version. We call this type of cache data "stale." Without a cache invalidation technique, your cached data could remain stale after database updates. To keep data fresh, you can use the following techniques:

- Cache Invalidation on Update: When you update data in the database, update or delete the corresponding cache entry as well. Write-through and write-back patterns handle this inherently, but write-around/cache-aside requires you to delete the cached data explicitly. This strategy prevents your application from retrieving stale data.

- Time To Live (TTL): TTL is a policy you can set when storing data in the cache. With TTL, data is automatically deleted after a specified time. This helps clear unused data and provides a failsafe against stale data in case of missed invalidations.
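Both techniques can be sketched together. `TTLCache` and `update_user` below are hypothetical names for illustration; Redis and Memcached support per-key expiry natively, so in practice you would rely on that rather than hand-rolling it. The clock is injectable only so the example can simulate time passing without sleeping.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry and report a miss.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key):
        self._entries.pop(key, None)


def update_user(database, cache, key, value):
    """Invalidation on update: write the database, then drop the cached copy."""
    database[key] = value
    cache.invalidate(key)


now = [0.0]  # fake clock so the example does not need to sleep
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
database = {"user:1": "Ada"}

cache.set("user:1", database["user:1"])
update_user(database, cache, "user:1", "Grace")
after_update = cache.get("user:1")  # None: the stale entry was invalidated

cache.set("user:1", database["user:1"])
fresh = cache.get("user:1")  # "Grace": still within the TTL
now[0] = 61.0
after_ttl = cache.get("user:1")  # None: the entry expired
```

The TTL acts as the failsafe here: even if some code path forgot to call `invalidate`, the stale entry would disappear after 60 seconds.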

Cache Replacement Policies

If you cache a large amount of data, your cache storage could fill up. Cache systems typically use memory, which is often smaller than your primary database storage. When the cache is full, it needs to delete some data to make room. Cache replacement policies determine which data to remove:

- Least Recently Used (LRU): This common policy evicts data that hasn't been used (read or written) for the longest time. LRU is suitable for most real-world use cases.

- Least Frequently Used (LFU): Similar to LRU, but evicts the data that is accessed least often rather than least recently. Newly written data starts with a low access count and might be evicted early, so consider adding a warm-up period during which data cannot be deleted.

Other replacement policies like FIFO (First-In, First-Out), Random Replacement, etc., exist, but are less common.
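As an illustration of LRU eviction, here is a small sketch built on Python's `collections.OrderedDict`. The `LRUCache` class is a hypothetical example; real cache servers implement eviction internally and usually only ask you to pick a policy in their configuration.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._entries = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._entries:
            return default
        # A read counts as a use: move the key to the "newest" end.
        self._entries.move_to_end(key)
        return self._entries[key]

    def set(self, key, value):
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            # Over capacity: drop the entry at the "oldest" end.
            self._entries.popitem(last=False)

    def keys(self):
        return list(self._entries)


cache = LRUCache(capacity=2)
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")       # "a" is now the most recently used key
cache.set("c", 3)    # cache is full, so "b" is evicted
print(cache.keys())  # ['a', 'c']
```

Because reading "a" refreshed it, "b" became the least recently used key and was the one removed.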

When Not To Cache

Before diving into cache implementation, it's important to know when it might not be the best fit. Caching often improves speed and reduces database load, but it might not make sense if:

- Low traffic: If your application has low traffic and the response time is still acceptable, you likely don't need caching yet. Adding a cache increases complexity, so it's best implemented when you face performance bottlenecks or anticipate a significant increase in traffic.

- Your system is write-heavy: Caching is most beneficial in read-heavy applications. This means data in your database is updated infrequently or read multiple times between updates. If your application has a high volume of writes, caching could potentially add overhead and slow things down.

Takeaways

In this article, we've covered the basics of caching and how to use it effectively. Here's a recap of the key points:

- Confirm the Need: Ensure your system is read-heavy and requires the latency reduction caching offers.

- Choose Patterns Wisely: Select cache writing and cache miss patterns that align with how your application uses data.

- Data Freshness: Implement cache invalidation strategies to prevent serving stale data.

- Manage Replacement Policy: Choose a cache replacement policy (like LRU) to handle deletions when the cache reaches its capacity.

References

- https://gist.github.com/jboner/2841832

- https://www.bytesizedpieces.com/posts/cache-types

- https://www.techtarget.com/searchstorage/definition/cache

- https://www.youtube.com/watch?v=dGAgxozNWFE
