📚 Using LLMs to evaluate LLMs

🔗 Source: towardsdatascience.com

You can ask ChatGPT to act in a million different ways: as your nutritionist, language tutor, doctor, etc. It’s no surprise we see a lot of demos and products launching on top of the OpenAI API. But while it’s easy to make LLMs act a certain way, ensuring they perform well and accurately complete the given task is a completely different story.

The problem is that many criteria that we care about are extremely subjective. Are the answers accurate? Are the responses coherent? Was anything hallucinated? It’s hard to build quantifiable metrics for evaluation. Mostly, you need human judgment, but it’s expensive to have humans check a large number of LLM outputs.

Moreover, LLMs have a lot of parameters that you can tune: the prompt, temperature, context, and so on. You can also fine-tune the models on a specific dataset to fit your use case. With prompt engineering, even asking a model to take a deep breath [1] or making your request more emotional [2] can improve performance. There is a lot of room to tweak and experiment, but after you change something, you need to be able to tell whether the overall system got better or worse.

With human labour being slow and expensive, there is a strong incentive to find automatic metrics for these more subjective criteria. One interesting approach, which is gaining popularity, is using LLMs to evaluate the output from LLMs. After all, if ChatGPT can generate a good, coherent response to a question, can’t it also tell whether a given text is coherent? This opens up a whole box of potential biases, techniques, and opportunities, so let’s dive into it.

LLM biases

If you have a negative gut reaction about building metrics and evaluators using LLMs, your concerns are well-founded. This could be a horrible way to just propagate the existing biases.

For example, in the G-Eval paper, which we will discuss in more detail later, researchers showed that their LLM-based evaluation gives higher scores to GPT-3.5 summaries than human-written summaries, even when human judges prefer human-written summaries.

Another study, titled “Large Language Models are not Fair Evaluators” [3], found that when an LLM is asked to choose the better of two presented options, the order in which the options are presented significantly biases the result. GPT-4, for example, often preferred the first option, while ChatGPT preferred the second. You can check this yourself by asking the same question with the order flipped and seeing how consistent the LLM is in its answers. The authors subsequently developed techniques to mitigate this bias by running the LLM multiple times with different orders of options.
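The order-flipping check can be sketched in a few lines of Python. This is a minimal illustration, not the procedure from the paper: the `Judge` signature and the two toy judges are hypothetical stand-ins for a real LLM call, and a verdict is only accepted when both orderings agree.

```python
from typing import Callable, Optional

# Hypothetical judge interface: takes (question, option_a, option_b) and
# returns "A" or "B". In practice this would wrap a call to an LLM.
Judge = Callable[[str, str, str], str]


def consistent_preference(
    judge: Judge, question: str, first: str, second: str
) -> Optional[str]:
    """Ask the judge twice with the options swapped.

    Returns the preferred text if both orderings agree, otherwise None.
    """
    verdict_original = judge(question, first, second)
    verdict_flipped = judge(question, second, first)

    # Map each positional verdict ("A" or "B") back to the actual text.
    winner_original = first if verdict_original == "A" else second
    winner_flipped = second if verdict_flipped == "A" else first

    return winner_original if winner_original == winner_flipped else None


def always_first(question: str, option_a: str, option_b: str) -> str:
    """Toy judge with maximal position bias: always picks the first slot."""
    return "A"


def prefers_longer(question: str, option_a: str, option_b: str) -> str:
    """Toy judge whose choice depends on content, not on position."""
    return "A" if len(option_a) >= len(option_b) else "B"


if __name__ == "__main__":
    question = "Which summary is better?"
    print(consistent_preference(always_first, question, "short", "a longer one"))
    print(consistent_preference(prefers_longer, question, "short", "a longer one"))
```

The position-biased judge yields `None` because its pick changes when the order flips, while the content-based judge returns the same text both times. Counting how often `None` comes back over a dataset gives a rough inconsistency rate for a given evaluator.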

Evaluating the evaluators

At the end of the day, we want to know if LLMs can perform as well as or similarly to human evaluators. We can still approach this as a scientific problem:

- Set up evaluation criteria.

- Ask humans and LLMs to evaluate according to the criteria.

- Calculate the correlation between human and LLM evaluation.

This way, we can get an idea of how closely LLMs resemble human evaluators.
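As a rough sketch of the last step, the snippet below computes Spearman’s rank correlation between human and LLM ratings using only the standard library. Spearman is one common choice among several, and the score lists are made-up illustrative data, not results from any study.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to cover the run of values tied with position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def spearman(xs, ys):
    """Spearman correlation is the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


if __name__ == "__main__":
    # Made-up ratings for the same five outputs.
    human_scores = [1, 2, 3, 4, 5]
    llm_scores = [2, 2, 3, 5, 4]
    print(f"Spearman correlation: {spearman(human_scores, llm_scores):.3f}")
```

A rank-based measure fits here because we usually care more about whether the LLM orders outputs the way humans do than about whether the absolute scores match.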

Indeed, there are already several studies like this, showing that for certain tasks, LLMs do a much better job than more traditional evaluation metrics. And it’s worth noting that we don’t need a perfect correlation. If we evaluate over many examples, even if the evaluation isn’t perfect, we could still get some idea of whether the new system is performing better or worse. We could also use LLM evaluators to flag the worrying edge cases for human evaluators.

Let’s have a look at some of the recently proposed metrics and evaluators that rely on LLMs at their core.

G-Eval

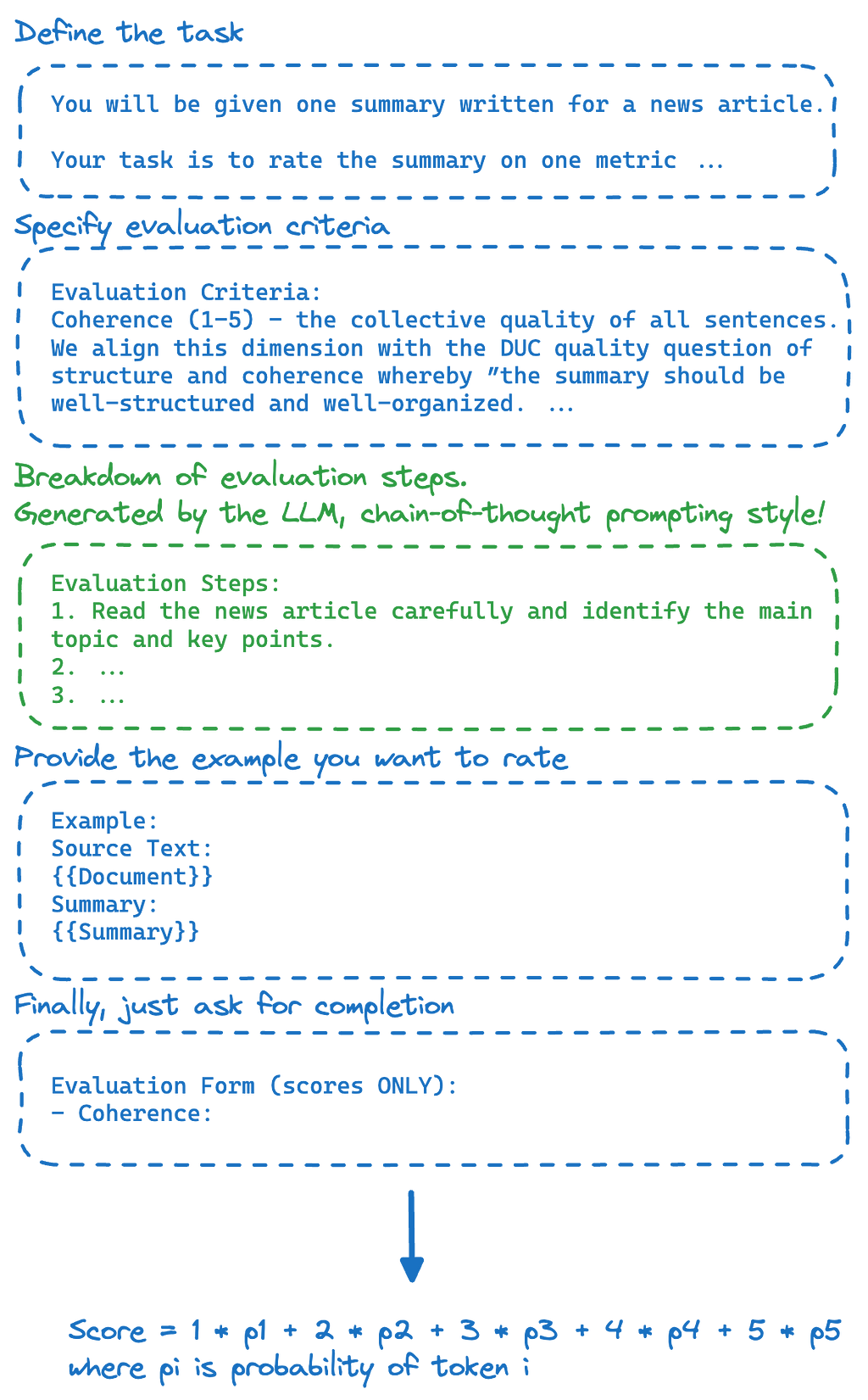

G-Eval [4] works by first outlining the evaluation criteria and then simply asking the model to give the rating. It could be used for summarisation and dialogue generation tasks, for example.

G-Eval has the following components:

- Prompt. Defines the evaluation task and its criteria.

- Intermediate instructions. Outline the intermediate steps for the evaluation. Rather than writing these steps by hand, the authors ask the LLM to generate them.

- Scoring function. Instead of taking the LLM score at face value, we look under the hood at the token probabilities to get the final score. So, if you ask for a rating between 1 and 5, instead of just taking whatever number the LLM gives (say “3”), we look at the probability of each rating and calculate the probability-weighted score. This is because the researchers found that one digit usually dominates the evaluation (e.g. the model outputs mostly 3), and even when you ask the LLM for a decimal value, it still tends to return integers.
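The scoring function can be illustrated with a short sketch. It assumes you have already obtained a probability for each candidate rating token; the numbers below are invented for illustration. Normalising by the total probability mass is an added assumption here, to cover the case where the probabilities of the rating tokens do not sum to one.

```python
from typing import Dict


def weighted_score(rating_probabilities: Dict[int, float]) -> float:
    """Probability-weighted rating: sum of rating * p(rating).

    The result is divided by the total probability mass so that it stays
    within the rating scale even if the inputs do not sum to one.
    """
    total = sum(rating_probabilities.values())
    if total <= 0:
        raise ValueError("rating probabilities must have positive total mass")
    weighted_sum = sum(
        rating * probability
        for rating, probability in rating_probabilities.items()
    )
    return weighted_sum / total


if __name__ == "__main__":
    # Invented example: the model would print "3", but some probability
    # mass sits on the neighbouring ratings.
    probabilities = {1: 0.05, 2: 0.10, 3: 0.60, 4: 0.20, 5: 0.05}
    print(f"Weighted score: {weighted_score(probabilities):.2f}")
```

With these numbers the weighted score is about 3.1 rather than a flat 3, which gives a finer-grained signal when comparing two outputs that would both receive the same integer rating.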

G-Eval was found to significantly outperform traditional reference-based metrics, such as BLEU and ROUGE, which had a relatively low correlation with human judgments. On the surface, it looks pretty straightforward, as we just ask the LLM to perform the evaluation. We could also try to break down the tasks into smaller components.

FactScore

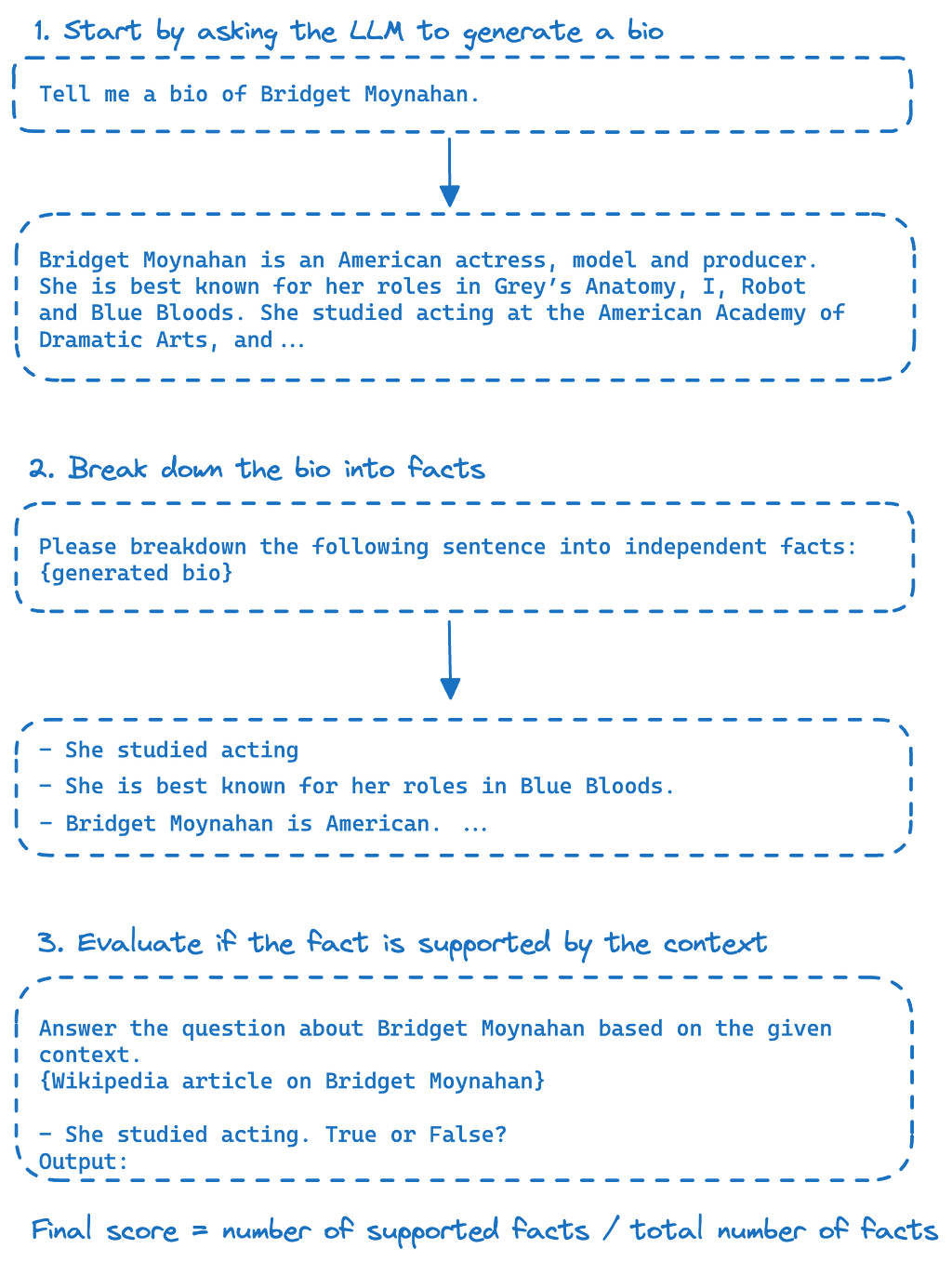

FactScore (Factual precision in Atomicity Score) [5] is a metric for factual precision. It rests on two key ideas: treating atomic facts as the unit of evaluation, and judging truthfulness against a particular knowledge source.

For evaluation, you break down the generation into small “atomic” facts (e.g. “He was born in New York”) and then check, for each fact, whether it is supported by the given ground-truth knowledge source. The final score is calculated by dividing the number of supported facts by the total number of facts.
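The final ratio is simple enough to sketch directly. In the snippet below, the list of atomic facts is assumed to have been extracted already, and `is_supported` is a hypothetical callable standing in for the check against the knowledge source (in practice an LLM or a human annotator); the toy set lookup is only there to make the example runnable.

```python
from typing import Callable, Iterable


def factual_precision(
    facts: Iterable[str], is_supported: Callable[[str], bool]
) -> float:
    """Fraction of atomic facts that the knowledge source supports."""
    facts = list(facts)
    if not facts:
        raise ValueError("need at least one atomic fact to score")
    supported = sum(1 for fact in facts if is_supported(fact))
    return supported / len(facts)


if __name__ == "__main__":
    # Toy knowledge source: an exact-match set of statements. A real check
    # would compare each fact against retrieved passages instead.
    knowledge_source = {
        "He was born in New York",
        "He studied physics",
    }
    atomic_facts = [
        "He was born in New York",
        "He studied physics",
        "He won an Olympic medal",
    ]
    score = factual_precision(atomic_facts, lambda fact: fact in knowledge_source)
    print(f"Factual precision: {score:.2f}")
```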

In the paper, the researchers asked LLMs to generate biographies of people and then used Wikipedia articles about them as the source of truth. When an LLM carried out the same procedure in place of human annotators, the error rate was less than 2%.

RAGAS

Now, let’s have a look at some metrics for retrieval-augmented generation (RAG). With RAG, you first retrieve the relevant context from an external knowledge base and then ask the LLM to answer the question based on those facts.

RAGAS (Retrieval Augmented Generation Assessment) [6] is a new framework for evaluating RAG systems. It’s not a single metric but rather a collection of them. The three proposed in the paper are faithfulness, answer relevance, and context relevance. These metrics illustrate well how you can break down evaluation into simpler tasks for LLMs.

Faithfulness measures how grounded the answers are in the given context. It’s very similar to FactScore, in that you first break down the generation into a set of statements and then ask the LLM whether each statement is supported by the given context. The score is the number of supported statements divided by the total number of statements. For faithfulness, the researchers found a very high correlation with human annotators.

Answer relevance captures whether the answer addresses the actual question. You start by asking the LLM to generate questions based on the answer. For each generated question, you calculate the similarity between it and the original question (by embedding both and using cosine similarity). By doing this n times and averaging the similarity scores, you get the final value for answer relevance.
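The averaging step might look like the sketch below. It assumes the questions have already been generated and embedded; the embeddings are plain lists of floats, and the two-dimensional vectors in the example are invented purely to keep it readable.

```python
import math
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def answer_relevance(
    original_question: Sequence[float],
    generated_questions: List[Sequence[float]],
) -> float:
    """Mean cosine similarity between the original question embedding and
    the embeddings of the n questions generated from the answer."""
    if not generated_questions:
        raise ValueError("need at least one generated question")
    similarities = [
        cosine_similarity(original_question, generated)
        for generated in generated_questions
    ]
    return sum(similarities) / len(similarities)


if __name__ == "__main__":
    # Invented 2-D embeddings: two generated questions close to the
    # original and one that drifts off topic.
    original = [1.0, 0.0]
    generated = [[0.9, 0.1], [1.0, 0.2], [0.1, 1.0]]
    print(f"Answer relevance: {answer_relevance(original, generated):.3f}")
```

An answer that wanders away from the question tends to produce generated questions that also wander, which pulls the average similarity down.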

Context relevance measures how focused the provided context is: ideally, we give the LLM the information needed to answer the question and nothing else. It is calculated by asking the LLM to extract the sentences from the given context that are relevant to answering the question. Dividing the number of relevant sentences by the total number of sentences in the context gives the final score.
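A minimal sketch of this ratio follows. The `extract_relevant` callable is a hypothetical stand-in for the LLM extraction step, the regex sentence splitter is a simplification, and the keyword-based toy extractor exists only to make the example runnable.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text)
    return [part.strip() for part in parts if part.strip()]


def context_relevance(
    question: str,
    context: str,
    extract_relevant: Callable[[str, List[str]], List[str]],
) -> float:
    """Number of extracted sentences divided by all context sentences."""
    sentences = split_sentences(context)
    if not sentences:
        raise ValueError("context contains no sentences")
    relevant = extract_relevant(question, sentences)
    return len(relevant) / len(sentences)


def keyword_extractor(question: str, sentences: List[str]) -> List[str]:
    """Toy stand-in for the LLM: keep sentences mentioning 'capital'."""
    return [sentence for sentence in sentences if "capital" in sentence.lower()]


if __name__ == "__main__":
    context = (
        "Paris is the capital of France. "
        "It rains a lot in Bergen. "
        "France is in Europe."
    )
    score = context_relevance(
        "What is the capital of France?", context, keyword_extractor
    )
    print(f"Context relevance: {score:.2f}")
```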

You can find further metrics and explanations in the project’s documentation and open-source GitHub repo.

The key point is that we can break evaluation down into smaller subproblems. Instead of asking whether the entire text is supported by the context, we ask only whether a small, specific fact is supported by the context. Instead of directly asking for a number that says whether the answer is relevant, we ask the LLM to think up a question for the given answer.

Conclusion

Evaluating LLMs is an extremely interesting research topic that will get more and more attention as more systems start reaching production and are also applied in more safety-critical settings.

We could also use these metrics to monitor the performance of LLMs in production to notice if the quality of outputs starts degrading. Especially for applications with high costs of mistakes, such as healthcare, it will be crucial to develop guardrails and systems to catch and reduce errors.

While there are definitely biases and problems with using LLMs as evaluators, we should still keep an open mind and approach it as a research problem. Of course, humans will still be involved in the evaluation process, but automatic metrics could help partially assess the performance in some settings.

These metrics don’t always have to be perfect; they just need to work well enough to guide the development of products in the right way.

Special thanks to Daniel Raff and Yevhen Petyak for their feedback and suggestions.

Originally published on Medplexity substack.

[1] Yang, Chengrun, et al. Large Language Models as Optimizers. arXiv, 6 Sept. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2309.03409.

[2] Li, Cheng, et al. Large Language Models Understand and Can Be Enhanced by Emotional Stimuli. arXiv, 5 Nov. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2307.11760.

[3] Wang, Peiyi, et al. Large Language Models Are Not Fair Evaluators. arXiv, 30 Aug. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2305.17926.

[4] Liu, Yang, et al. G-Eval: NLG Evaluation Using GPT-4 with Better Human Alignment. arXiv, 23 May 2023. arXiv.org, https://doi.org/10.48550/arXiv.2303.16634.

[5] Min, Sewon, et al. FActScore: Fine-Grained Atomic Evaluation of Factual Precision in Long Form Text Generation. arXiv, 11 Oct. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2305.14251.

[6] Es, Shahul, et al. RAGAS: Automated Evaluation of Retrieval Augmented Generation. arXiv, 26 Sept. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2309.15217.

Using LLMs to evaluate LLMs was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
