📚 Concurrency in golang with goroutines, threads and process.

💡 Newskategorie: Programmierung

🔗 Quelle: dev.to

Hi guys, hope that everyone is fine, today we will talk about concurrency in Golang, we will see how the language deal with this type of process, with the goroutines, what the difference between threads and goroutines and more.

First we need to look back and review the concepts of process and threads to understand what is a goroutine. So let's go end the cheap chat and start this.

Process

A process is any instance of a computer program's execution. It is within the process that resources are allocated and managed by the operating system. Imagine that you open a program like Vscode, this program will consume memory resources and CPU time, will receive a process identifier (PID), and will be executed on your machine. To see some process in your machine, just type this command htop in your terminal.

Thread

A thread can be conceptualized as a sequence of small, executable steps within a process, allowing us to examine the process in finer detail. Unlike processes, which typically do not share resources with others unless specific mechanisms like sockets, IPC (Inter-Process Communication), or pipes are employed, threads within the same process inherently share resources. Each thread has its own thread of execution, but they collectively utilize the same memory and resources allocated to their parent process. This architecture facilitates efficient resource management and execution performance, as threads operate under a shared environment without compromising the organization and efficiency of the overall process.

Why process don't share resources and threads do?

Among the various reasons for not deliberately sharing resources between processes naturally, the most common and frequently encountered in the past was the writing of data from one process into the memory of another, leading to the loss of information. Imagine you are working in your favorite IDE, creating an endpoint in C, and you pause to respond to an email. When you save and send that email, the email client process writes data into the memory space allocated for your IDE, and your endpoint turns into an email message confirming a meeting, or even worse, causes a memory overflow resulting in a Buffer Overflow in the process. This can lead to the process being unexpectedly terminated.

Concurrency

Concurrency is the ability of a computer to execute multiple processes simultaneously, but not at the exact same moment. When using various processes of a computer, it's common to get the impression that it is executing everything like movies, audio, images, or texts all at the same time, but this is not what actually happens. To handle multiple processes, most machines use some form of concurrency technique, which involves interleaving execution. In this way, a computer deals with a queue composed of fragments from various different processes.

Goroutines: How Go Handles Concurrency

Initially, a goroutine is similar in concept to a thread, meaning it's a segment of a process that is being executed. However, goroutines stand out due to some key characteristics.

- Small initial size: 2Kb-4Kb, unlike a thread which typically starts with 2Mb.

- Dynamic size variation: when a goroutine exceeds its initial size, its data is allocated to another, larger goroutine.

- Segmented memory allocation: In cases where a goroutine requires significantly more space than usual, the runtime can allocate sections of these goroutines into other, discontinuous empty memory spaces. This ensures rapid memory allocation and avoids overloading the system

Hint: Runtime refers to some grouped functionalities that manage functions such as garbage collector, goroutines scheduler, panic error handling and other low-level system functions.

Creating a goroutine

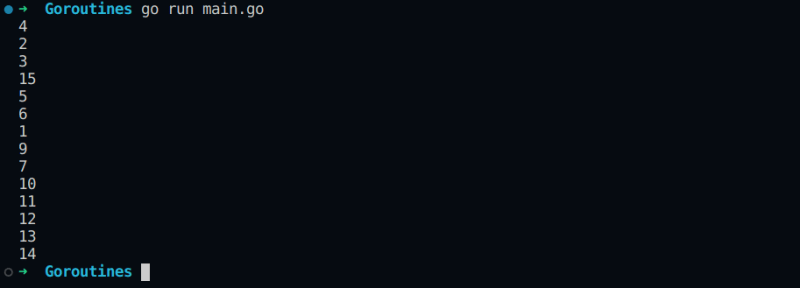

Let's start by creating our file main.go and create a function named printMessage, which takes an argument and prints it. We will call this function inside the main function with the 'go' keyword preceding it and pass the element of an iteration as an argument. The go keyword indicates that the function will be executed asynchronously, meaning a goroutine will be created for it, and the function will run in the background. By using the command 'go run main.go', we can see the result in the terminal below.

package main

import (

"fmt"

"strconv"

)

func main(){

for i :=0; i<50; i++{

go printMessage(strconv.Itoa(i));

}

}

func printMessage(i string){

fmt.Println(i)

}

So nothing new so far, but when we add a normal print below the printMessage function and changes the numbers of iterations to 10, we will have another result when running the program again.

As we can see, only the print statement outside of the printMessage function was called. This happens because when the task is sent to the background, the rest of the code in the main function continues to execute synchronously. This means that the regular print statements are executed quickly. Once the execution of the synchronous prints is complete, the main function ends, and any processes that were running in the background are also terminated, even if they are still in a queue waiting for execution.

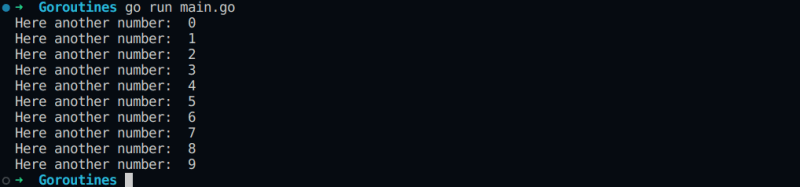

As we have seen, it is essential that the runtime waits for the execution of goroutines before terminating the program. To achieve this, we need to signal this necessity. We import the sync module and will use the WaitGroup class. This class allows us to create a kind of management order, in which we can request to wait for the program to terminate while there is any element being executed. To do this, we will perform operations of adding and removing elements.

Initially, we will create a variable in the scope of the main function to hold the WaitGroup class. Then, we will add each element of the iteration to a waiting list. After the element is printed, we will remove this item from our list. When all elements have been printed and the list is empty, our program will terminate. At the end of the main function, we will use the Wait method, which indicates that the runtime should wait for the task list to be completed before terminating the program execution.

Our resulting code would look like this:

package main

import (

"fmt"

"strconv"

"sync"

)

func main() {

var wg sync.WaitGroup

for i := 0; i < 10; i++ {

wg.Add(1)

go printMessage(strconv.Itoa(i), &wg)

fmt.Println("Here another number: ", i)

}

wg.Wait()

}

func printMessage(i string, wg *sync.WaitGroup) {

fmt.Println(i)

defer wg.Done()

}

When executing the code again with our command "run go main.go" we see that all items in the iteration were executed within the printMessage function and in the common println.

When we observe the result, despite the function and println being called, we can see that there is some disorganization in the printouts when it comes to the order of the asynchronous object. This occurs because there is a competition for resource consumption, and a goroutine can be called and finished in the midst of another. To avoid this problem, we use a lock on that memory resource and release it with an unlock when it is finished.

To block and release access to the resource, we will also use the sync package but will use the Mutex class.

Hint: Mutex (Mutual Exclusion) guarantees that only one goroutine can access that resource at a time.

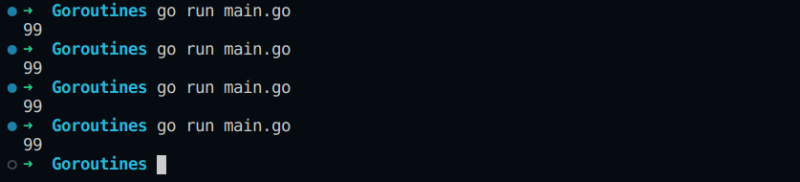

We will use a code similar to our first one, but it will only perform a '+1' addition in each iteration. Before passing the argument, we will lock the memory access so that only the goroutine in question can access that resource. After accessing the resource and performing the sum, we will release the access so that the next goroutines can access it.

package main

import (

"fmt"

"sync"

"time"

)

func main(){

var mu sync.Mutex

i :=0

for x:=0; x<100; x++{

mu.Lock()

i = x

go sumNumber(i)

mu.Unlock()

}

time.Sleep(time.Second*2)

fmt.Println(i)

}

func sumNumber(i int){

i++

}

According to the image above, we can see that the result is always the largest object of the iteration, indicating that it occurred in an ordered manner regardless of how many times the main function is called. This ensures that the goroutines occur in a predictable and safe way.

However, there is a downside to using this type of mechanism. For example, just as multi-threaded systems were developed to increase execution speed, goroutines also aim to execute different parts of a code concurrently. By limiting execution to one at a time, the results obtained from the bus of multiple simultaneous threads can sometimes become quite slow.

There are other factors that directly affect the performance of a process:

- Each Lock/Unlock operation requires communication from the CPU with the machine's OS.

- Risk of one or more threads being idle, waiting for the execution of another indefinitely (DEADLOCKS).

- Managing the access of threads and goroutines simultaneously for a long time (remember that threads by default share the same resources?)

Therefore, the manual control of goroutines using Mutex must be done with great care where the load at that point in the application should be considered, as well as whether the order of execution really matters more than the speed of execution.

Channels

Although it is possible to manipulate memory access and perform wait calls, there is a simpler way to use data from a goroutine through channels. Channels are tunneling data structures where data from one goroutine can be sent to others. Through this structure we can:

- Define the data type of the channel

- Use FIFO to ensure data ordering

- Have unidirectional (Read or Write) or bidirectional (Read and Write) channels

- Perform blocking operations

To create a channel, we will initialize it as empty with the type int using the make command. With that, we will create a function named createList which will receive the channel as an argument and write 10 integer numbers into it obtained through a simple iteration, and then we will close it using the close method.

Hint: If the channel is not closed, there will be a fatal error of deadlock.

With our channel filled, we will use it for reading in a simple iteration within the main function.

package main

import (

"fmt"

)

func main(){

channel := make(chan int)

go createList(channel)

for x := range(channel){

fmt.Println(x)

}

}

func createList(channel chan int){

for i :=0; i<100; i++{

channel <-i

}

close(channel)

}

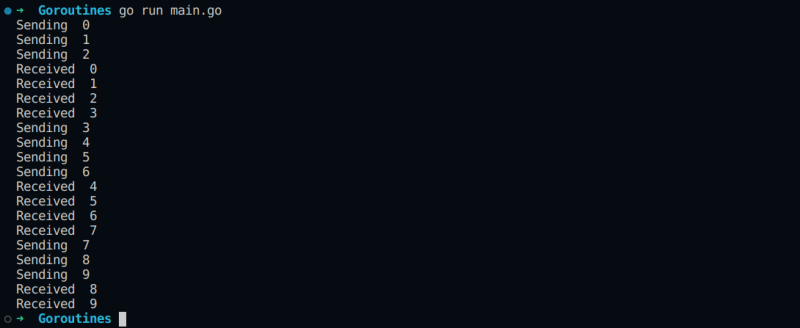

After use go run main.go we have this result:

By default, a channel receives one data at a time, that is, a goroutine can send one data at a time to another goroutine, the second one must receive the data before the first goroutine sends another data.

Let's just add a println on line 12 and 19 and we will see the following sequence.

In the previous example we used an unbuffered channel, however we can define "data packets" to be sent at once through the buffering process.

To do that we need only set the size of our channel in the make method like this channel := make(chan int, 2) and running your code again we have the following result.

This way we are able to send data packets of different sizes between our goroutines.

This was a brief tutorial to help us understand a little how goroutines work in Golang and review some essential computing concepts. If you have any questions, feel free to comment below =D.

...

800+ IT

News

als RSS Feed abonnieren

800+ IT

News

als RSS Feed abonnieren